the bible, truth, God's kingdom, JEHOVAH God, New World, JEHOVAH Witnesses, God's church, Christianity, apologetics, spirituality.

Friday, 8 April 2022

Addressing Darwinist just-so stories on eye evolution.

More Implausible Stories about Eye Evolution

Recently an email correspondent asked me about a clip from Neil deGrasse Tyson’s reboot of Cosmos in which he claims that eyes could have evolved via unguided mutations. Even though the series is now eight years old, it’s still promoting implausible stories about eye evolution. Clearly, despite having been addressed by proponents of intelligent design many times over, this issue is not going away. Let’s revisit the question and look at how Tyson and others have handled it.

In the clip, Tyson claims that the eye is easily evolvable by natural selection and that it all started when some “microscopic copying error” created a light-sensitive protein for a lucky bacterium. But there’s a problem: creating a light-sensitive protein wouldn’t help the bacterium see anything. Why? Because seeing requires circuitry or some kind of visual processing pathway to interpret the signal and trigger the appropriate response. That’s the problem with evolving vision: you can’t just have the photon collectors. You need the photon collectors, the visual processing system, and the response-triggering system. At the very least, three systems are required for vision to give you a selective advantage. It would be prohibitively unlikely for such a set of complex coordinated systems to evolve by stepwise mutations and natural selection.

A “Masterpiece” of Complexity

Tyson calls the human eye a “masterpiece” of complexity, and claims it “poses no challenge to evolution by natural selection.” But do we really know this is true?

Darwinian evolution tends to work fine when one small change or mutation provides a selective advantage, or as Darwin put it, when an organ can evolve via “numerous, successive, slight modifications.” If a structure cannot evolve via “numerous, successive, slight modifications,” Darwin said, his theory “would absolutely break down.” Writing in The New Republic some years ago, evolutionist Jerry Coyne essentially agreed: “It is indeed true that natural selection cannot build any feature in which intermediate steps do not confer a net benefit on the organism.” So are there structures that would require multiple steps to provide an advantage, where intermediate steps might not confer a net benefit on the organism? If you listen to Tyson’s argument carefully, I think he lets slip that there are.

Tyson says that “a microscopic copying error” gave a protein the ability to be sensitive to light. He doesn’t explain how that happened. Indeed, biologist Sean B. Carroll cautions us to “not be fooled” by the “simple construction and appearance” of supposedly simple light-sensitive eyes, since they “are built with and use many of the ingredients used in fancier eyes.” Tyson doesn’t worry about explaining how any of those complex ingredients arose at the biochemical level. What’s more interesting is what Tyson says next: “Another mutation caused it [a bacterium with the light-sensitive protein] to flee intense light.”

An Interesting Question

It’s nice to have a light-sensitive protein, but unless the sensitivity to light is linked to some behavioral response, how would the sensitivity provide any advantage? Only once a behavioral response also evolved (say, to turn towards or away from the light) can the light-sensitive protein provide an advantage. So if a light-sensitive protein evolved, why did it persist until the behavioral response evolved as well? There’s no good answer to that question, because vision is fundamentally a multi-component, and thus a multi-mutation, feature. Multiple components, both the visual apparatus and the encoded behavioral response, are necessary for vision to provide an advantage. It’s likely that these components would require many mutations. Thus, we have a trait where an intermediate stage (say, a light-sensitive protein all by itself) would not confer a net advantage on the organism. This is where Darwinian evolution tends to get stuck.

Tyson seemingly assumes those subsystems were in place, and claims that a multicellular animal might then evolve a more complex eye in a stepwise fashion. He says the first step is that a “dimple” arises which provides a “tremendous advantage,” and that dimple then “deepens” to improve visual acuity. A pupil-type structure then evolves to sharpen the focus, but this results in less light being let in. Next, a lens evolves to provide “both brightness and sharp focus.” This is the standard account of eye evolution that I and others have critiqued before. Francis Collins and Karl Giberson, for example, have made a similar set of arguments.

Such accounts invoke the abrupt appearance of key features of advanced eyes including the lens, cornea, and iris. The presence of each of these features — fully formed and intact — would undoubtedly increase visual acuity. But where did the parts suddenly come from in the first place? As Scott Gilbert of Swarthmore College put it, such evolutionary accounts are “good at modelling the survival of the fittest, but not the arrival of the fittest.”

Hyper-Simplistic Accounts

As a further example of these hyper-simplistic accounts of eye evolution, Francisco Ayala in his book Darwin’s Gift to Science and Religion asserts, “Further steps — the deposition of pigment around the spot, configuration of cells into a cuplike shape, thickening of the epidermis leading to the development of a lens, development of muscles to move the eyes and nerves to transmit optical signals to the brain — gradually led to the highly developed eyes of vertebrates and cephalopods (octopuses and squids) and to the compound eyes of insects.” (p. 146)

Ayala’s explanation is vague and shows no appreciation for the biochemical complexity of these visual organs. Thus, regarding the configuration of cells into a cuplike shape, biologist Michael Behe asks (in responding to Richard Dawkins on the same point):

And where did the “little cup” come from? A ball of cells–from which the cup must be made–will tend to be rounded unless held in the correct shape by molecular supports. In fact, there are dozens of complex proteins involved in maintaining cell shape, and dozens more that control extracellular structure; in their absence, cells take on the shape of so many soap bubbles. Do these structures represent single-step mutations? Dawkins did not tell us how the apparently simple “cup” shape came to be.

Michael J. Behe, Darwin’s Black Box: The Biochemical Challenge to Evolution, p. 15 (Free Press, 1996)

An Integrated System

Likewise, mathematician and philosopher David Berlinski has assessed the alleged “intermediates” for the evolution of the eye. He observes that the transmission of data signals from the eye to a central nervous system for data processing, which can then output some behavioral response, comprises an integrated system that is not amenable to stepwise evolution:

Light strikes the eye in the form of photons, but the optic nerve conveys electrical impulses to the brain. Acting as a sophisticated transducer, the eye must mediate between two different physical signals. The retinal cells that figure in Dawkins’ account are connected to horizontal cells; these shuttle information laterally between photoreceptors in order to smooth the visual signal. Amacrine cells act to filter the signal. Bipolar cells convey visual information further to ganglion cells, which in turn conduct information to the optic nerve. The system gives every indication of being tightly integrated, its parts mutually dependent.

The very problem that Darwin’s theory was designed to evade now reappears. Like vibrations passing through a spider’s web, changes to any part of the eye, if they are to improve vision, must bring about changes throughout the optical system. Without a correlative increase in the size and complexity of the optic nerve, an increase in the number of photoreceptive membranes can have no effect. A change in the optic nerve must in turn induce corresponding neurological changes in the brain. If these changes come about simultaneously, it makes no sense to talk of a gradual ascent of Mount Improbable. If they do not come about simultaneously, it is not clear why they should come about at all.

The same problem reappears at the level of biochemistry. Dawkins has framed his discussion in terms of gross anatomy. Each anatomical change that he describes requires a number of coordinate biochemical steps. “[T]he anatomical steps and structures that Darwin thought were so simple,” the biochemist Mike Behe remarks in a provocative new book (Darwin’s Black Box), “actually involve staggeringly complicated biochemical processes.” A number of separate biochemical events are required simply to begin the process of curving a layer of proteins to form a lens. What initiates the sequence? How is it coordinated? And how controlled? On these absolutely fundamental matters, Dawkins has nothing whatsoever to say.

David Berlinski, “Keeping an Eye on Evolution: Richard Dawkins, a Relentless Darwinian Spear Carrier, Trips Over Mount Improbable,” Globe & Mail (November 2, 1996)

More or Less One Single Feature

In sum, standard accounts of eye evolution fail to explain the evolution of key eye features such as:

- The biochemical evolution of the fundamental ability to sense light

- The origin of the first “light-sensitive spot”

- The origin of neurological pathways to transmit the optical signal to a brain

- The origin of a behavioral response to allow the sensing of light to give some behavioral advantage to the organism

- The origin of the lens, cornea, and iris in vertebrates

- The origin of the compound eye in arthropods

At most, accounts of the evolution of the eye provide a stepwise explanation of “fine gradations” for the origin of more or less one single feature: the increased concavity of eye shape. That does not explain the origin of the eye. But from Neil Tyson and the others, you’d never know that.

Against the stigma of the skilled trades.

The Stigma of Choosing Trade School Over College

When college is held up as the one true path to success, parents—especially highly educated ones—might worry when their children opt for vocational school instead.

Toren Reesman knew from a young age that he and his brothers were expected to attend college and obtain a high-level degree. As a radiologist—a profession that requires 12 years of schooling—his father made clear what he wanted for his boys: “Keep your grades up, get into a good college, get a good degree,” as Reesman recalls it. Of the four Reesman children, one brother has followed this path so far, going to school for dentistry. Reesman attempted to meet this expectation, as well. He enrolled in college after graduating from high school. With his good grades, he got into West Virginia University—but he began his freshman year with dread. He had spent his summers in high school working for his pastor at a custom-cabinetry company. He looked forward each year to honing his woodworking skills, and took joy in creating beautiful things. School did not excite him in the same way. After his first year of college, he decided not to return.

He says pursuing custom woodworking as his lifelong trade was disappointing to his father, but Reesman stood firm in his decision, and became a cabinetmaker. He says his father is now proud and supportive, but breaking with family expectations in order to pursue his passion was a difficult choice for Reesman—one that many young people are facing in the changing job market.

Traditional-college enrollment in the United States has risen this century, from 13.2 million students enrolled in 2000 to 16.9 million students in 2016. This is an increase of 28 percent, according to the National Center for Education Statistics. Meanwhile, trade-school enrollment has also risen, from 9.6 million students in 1999 to 16 million in 2014. This resurgence came after a decline in vocational education in the 1980s and ’90s. That dip created a shortage of skilled workers and tradespeople.

Many jobs now require specialized training in technology that bachelor’s programs are usually too broad to address, leading to more “last mile”–type vocational-education programs after the completion of a degree. Programs such as Galvanize aim to teach specific software and coding skills; Always Hired offers a “tech-sales bootcamp” to graduates. The manufacturing, infrastructure, and transportation fields are all expected to grow in the coming years—and many of those jobs likely won’t require a four-year degree.


This shift in the job and education markets can leave parents feeling unsure about the career path their children choose to pursue. Lack of knowledge and misconceptions about the trades can lead parents to steer their kids away from these programs, when vocational training might be a surer path to a stable job.

Raised in a family of truck drivers, farmers, and office workers, Erin Funk was the first in her family to attend college, obtaining a master’s in education and going on to teach second grade for two decades. Her husband, Caleb, is a first-generation college graduate in his family, as well. He first went to trade school, graduating in 1997, and later decided to strengthen his résumé following the Great Recession. He began his bachelor’s degree in 2009, finishing in 2016. The Funks now live in Toledo, Ohio, and have a 16-year-old son, a senior in high school, who is already enrolled in vocational school for the 2019–20 school year. The idea that their son might not attend a traditional college worried Erin and Caleb at first. “Vocational schools where we grew up seemed to be reserved for people who weren’t making it in ‘real’ school, so we weren’t completely sure how we felt about our son attending one,” Erin says. Both Erin and Caleb worked hard to be the first in their families to obtain college degrees, and wanted the same opportunity for their three children. After touring the video-production-design program at Penta Career Center, though, they could see the draw for their son. Despite their initial misgivings, after learning more about the program and seeing how excited their son was about it, they’ve thrown their support behind his decision.

But not everyone in the Funks’ lives understands this decision. Erin says she ran into a friend recently, and “as we were catching up, I mentioned that my eldest had decided to go to the vocational-technical school in our city. Her first reaction was, ‘Oh, is he having problems at school?’ I am finding as I talk about this that there is an attitude out there that the only reason you would go to a vo-tech is if there’s some kind of problem at a traditional school.” The Funks’ son has a 3.95 GPA. He was simply more interested in the program at Penta Career Center. “He just doesn’t care what anyone thinks,” his mom says.

The Funks are not alone in their initial gut reaction to the idea of vocational and technical education. Negative attitudes and misconceptions persist even in the face of the positive statistical outlook for the job market for these middle-skill careers. “It is considered a second choice, second-class. We really need to change how people see vocational and technical education,” Patricia Hsieh, the president of a community college in the San Diego area, said in a speech at the 2017 conference for the American Association of Community Colleges. European nations prioritize vocational training for many students, with half of secondary students (the equivalent of U.S. high-school students) participating in vocational programs. In the United States, since the passage of the 1944 GI Bill, college has been pushed over vocational education. This college-for-all narrative has been emphasized for decades as the pathway to success and stability; parents might worry about the future of their children who choose a different path.


Dennis Deslippe and Alison Kibler are both college professors at Franklin and Marshall College in Lancaster, Pennsylvania, so it was a mental shift for them when, after high school, their son John chose to attend the masonry program at Thaddeus Stevens College of Technology, a two-year accredited technical school. John was always interested in working with his hands, Deslippe and Kibler say—building, creating, and repairing, all things that his academic parents are not good at, by their own confession.

Deslippe explains, “One gap between us as professor parents and John’s experience is that we do not really understand how Thaddeus Stevens works in the same way that we understand a liberal-arts college or university. We don’t have much advice to give. Initially, we needed some clarity about what masonry exactly was. Does it include pouring concrete, for example?” (Since their son is studying brick masonry, his training will likely not include concrete work.) Deslippe’s grandfather was a painter, and Kibler’s grandfather was a woodworker, but three of their four parents were college grads. “It’s been a long-standing idea that the next generation goes to college and moves out of ‘working with your hands,’” Kibler muses. “Perhaps we are in an era where that formula of rising out of trades through education doesn’t make sense?”

“College doesn’t make sense” is the message that many trade schools and apprenticeship programs are using to entice new students. What specifically doesn’t make sense, they claim, is the amount of debt many young Americans take on to chase those coveted bachelor’s degrees. There is $1.5 trillion in student debt outstanding as of 2018, according to the Federal Reserve. Four in 10 adults under the age of 30 have student-loan debt, according to the Pew Research Center. Master’s and doctorate degrees often lead to even more debt. Earning potential does not always offset the cost of these loans, and only two-thirds of those with degrees think that the debt was worth it for the education they received. Vocational and technical education tends to cost significantly less than a traditional four-year degree.

The financial stability a trade can offer is appealing to Marsha Landis, who lives with her cabinetmaker husband and two children outside of Jackson Hole, Wyoming. Landis has a four-year degree from a liberal-arts college, and when she met her husband while living in Washington, D.C., she found his profession to be a refreshing change from the typical men she met in the Capitol Hill dating scene. “He could work with his hands, create,” she says. “He wasn’t pretentious and wrapped up in the idea of degrees. And he came to the marriage with no debt and a marketable skill, something that has benefited our family in huge ways.” She says that she has seen debt sink many of their friends, and that she would support their children if they wanted to pursue a trade like their father.

In the United States, college has been painted as the pathway to success for generations, and it can be, for many. Many people who graduate from college make more money than those who do not. But the rigidity of this narrative could lead parents and students alike to be shortsighted as they plan for their future careers. Yes, many college graduates make more money, but fewer than half of students finish the degrees they start. This number drops as low as 10 percent for students in poverty. The ever sought-after college-acceptance letter isn’t a guarantee of a stable future if students aren’t given the support they need to complete a degree. If students are exposed to the possibility of vocational training early on, that might help remove some of the stigma, and help students and parents alike see a variety of paths to a successful future.

On the right to conscientious objection to military service.

About conscientious objection to military service and human rights

The right to conscientious objection to military service is based on article 18 of the International Covenant on Civil and Political Rights, which guarantees the right to freedom of thought, conscience and religion or belief. While the Covenant does not explicitly refer to a right to conscientious objection, in its general comment No. 22 (1993) the Human Rights Committee stated that such a right could be derived from article 18, inasmuch as the obligation to use lethal force might seriously conflict with the freedom of conscience and the right to manifest one’s religion or belief.

The Human Rights Council, and previously the Commission on Human Rights, have also recognized the right of everyone to have conscientious objection to military service as a legitimate exercise of the right to freedom of thought, conscience and religion, as laid down in article 18 of the Universal Declaration of Human Rights and article 18 of the International Covenant on Civil and Political Rights (see their resolutions which were adopted without a vote in 1989, 1991, 1993, 1995, 1998, 2000, 2002, 2004, 2012, 2013 and 2017).

OHCHR’s work on conscientious objection to military service

OHCHR has a mandate to promote and protect the effective enjoyment by all of all civil, cultural, economic, political and social rights, as well as to make recommendations with a view to improving the promotion and protection of all human rights. The High Commissioner for Human Rights has submitted thematic reports on conscientious objection to military service both to the Commission on Human Rights (in 2004 and 2006) and to the Human Rights Council (in 2007, 2008, 2013, 2017 and 2019). The latest report (A/HRC/41/23, para. 60) stresses that application procedures for obtaining the status of conscientious objector to military service should comply, as a minimum, with the following criteria:

- Availability of information

- Cost-free access to application procedures

- Availability of the application procedure to all persons affected by military service

- Recognition of selective conscientious objection

- Non-discrimination on the basis of the grounds for conscientious objection and between groups

- No time limit on applications

- Independence and impartiality of the decision-making process

- Good faith determination process

- Timeliness of decision-making and status pending determination

- Right to appeal

- Compatibility of alternative service with the reasons for conscientious objection

- Non-punitive conditions and duration of alternative service

- Freedom of expression for conscientious objectors and those supporting them.

On language and the tyranny of authority.

Why Words Matter: Sense and Nonsense in Science

Editor’s note: We are delighted to present a new series by Neil Thomas, Reader Emeritus at the University of Durham, “Why Words Matter: Sense and Nonsense in Science.” This is the first article in the series. Professor Thomas’s recent book is Taking Leave of Darwin: A Longtime Agnostic Discovers the Case for Design (Discovery Institute Press).

My professional background in European languages and linguistics has given me some idea of how easy it is for people in all ages and cultures to create neologisms or ad hoc linguistic formulations for a whole variety of vague ideas and fancies. In fact, it seems all too easy to fashion words to cover any number of purely abstract, at times even chimerical notions, the more convincingly (for the uncritical) if one chooses to append the honorific title of “science” to one’s subjective thought experiments.

One can, for instance, if so inclined, muse with Epicurus, Lucretius, and David Hume that the world “evolved” by chance collocations of atoms and then proceed to dignify one’s notion by dubbing it “the theory of atomism.” Or one can, with Stephen Hawking, Lawrence Krauss, and Peter Atkins,[1] conclude that the universe and all within it arose spontaneously from “natural law.” But in all these cases we have to be willing to ignore the fact that such theories involve what is known grammatically as the “suppression of the agent.” This means the failure to specify who the agent/legislator might be, which is the sort of vagueness we were taught to avoid in school English lessons. A mundane example of this suppression of the agent is the criminal’s perennial excuse, “The gun just went off in my hand, officer, honest.”

A Universe by an “Agentless Act”

As I have pointed out before,[2] it is both a grammatical solecism and a logical impossibility to contend with Peter Atkins that the universe arose through an “agentless act,” since this would imply some form of pure automatism or magical instrumentality quite outside common experience or observability. In a similar vein one might, with Charles Darwin, theorize that the development of the biosphere was simply down to that empirically unattested sub-variant of chance he chose to term natural selection.[3] Since no empirical evidence exists for any of the above conjectures, they must inevitably remain terms without referents or, to use the mot juste from linguistics, empty signifiers.

Empty Signifiers in Science

Many terms we use in everyday life are, and are widely acknowledged to be, notional rather than factual. The man on the moon and the fabled treasure at the end of the rainbow are trivial examples of what are sometimes termed “airy nothings.” These are factually baseless terms existing “on paper” but without any proper referent in the real world because no such referent exists. Nobody, of course, is misled by light-hearted façons de parler widely understood to be only imaginary, but real dangers for intellectual clarity arise when a notional term is mistaken for reality.

One famous historical example of such a term was the substance dubbed phlogiston, postulated in the 1660s as a fire-like substance inhering in all combustible bodies; but just over a century later the French scientist Antoine Lavoisier proved that no such substance existed, and phlogiston is now rightly termed pseudo-science. Or again, in more recent times, there is that entirely apocryphal entity dubbed “ectoplasm.” Victorian spiritualists claimed that this term denoted a substance supposedly exuded from a “medium” (see the photo above), representing the materialization of a spiritual force once existing in a now deceased human body. Needless to say, the term “ectoplasm” is now treated with unqualified skepticism.

Next, “The Man on the Moon and Martian Canals.”

Notes

1. Stephen Hawking and Leonard Mlodinow, The Grand Design: New Answers to the Ultimate Questions of Life (London: Bantam, 2011); P. W. Atkins, Creation Revisited (Oxford and New York: Freeman, 1992); Lawrence Krauss, A Universe from Nothing (London: Simon and Schuster, 2012).

2. See Neil Thomas, Taking Leave of Darwin (Seattle: Discovery, 2021), p. 110, where I point out that the expression is a contradiction in terms.

3. Darwin in later life, stung that many friends thought he was all but deifying natural selection, came to concede that natural preservation might have been the more accurate term to use, but of course that opens up the huge problem of how organic innovation (the microbes-to-man conjecture) can be defended in reference to a process which simply preserved and had no productive or creative input.

PS. Works for theology too.

A civilisation beneath our feet and the design debate.

To Regulate Foraging, Harvester Ants Use a (Designed) Feedback Control Algorithm

A recent study in the Journal of the Royal Society Interface reports on “A feedback control principle common to several biological and engineered systems.” The researchers, Jonathan Y. Suen and Saket Navlakha, show how harvester ants (Pogonomyrmex barbatus) use a feedback control algorithm to regulate foraging behavior. As Science Daily notes, the study determined that, “Ants and other natural systems use optimization algorithms similar to those used by engineered systems, including the Internet.”

The ants forage for seeds that are widely scattered and usually do not occur in concentrated patches. Foragers usually continue their search until they find a seed. The return rate of foragers corresponds to the availability of seeds: the more food is available, the less time foragers spend searching. When the ants successfully find food, they return to the nest in approximately one third of the search time compared to ants unable to find food. There are several aspects of this behavior that point to intelligent design.

Feedback Control

First, it is based on the general engineering concept of a feedback control system. Such systems use the output of a system to make adjustments to a control mechanism and maintain a desired setting. A common example is the temperature control of heating and air conditioning systems. An analogy in biology is homeostasis, which uses negative feedback, and is designed to maintain a constant body temperature.
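To make the thermostat example concrete, here is a minimal sketch of a negative-feedback loop. It is a toy model under stated assumptions: the function name `simulate_thermostat`, the proportional `gain`, the heat-loss coefficient `leak`, and the temperatures are all hypothetical values chosen for illustration, not taken from any real heating system. Each step measures the output (room temperature), compares it with the desired setting, and adjusts the heater in proportion to the error.

```python
def simulate_thermostat(
    setpoint: float,
    start_temp: float,
    outside_temp: float,
    gain: float = 0.5,   # hypothetical proportional gain of the heater
    leak: float = 0.1,   # hypothetical fraction of the indoor/outdoor gap lost per step
    steps: int = 50,
) -> list[float]:
    """Toy negative-feedback loop: measure the room temperature, compare it
    with the setpoint, and heat in proportion to the difference."""
    temp = start_temp
    history = [temp]
    for _ in range(steps):
        error = setpoint - temp              # feedback: distance from the desired setting
        heating = max(0.0, gain * error)     # the heater can add heat but not remove it
        loss = leak * (temp - outside_temp)  # heat drifts toward the outside temperature
        temp = temp + heating - loss
        history.append(temp)
    return history


if __name__ == "__main__":
    trace = simulate_thermostat(setpoint=20.0, start_temp=10.0, outside_temp=5.0)
    print(round(trace[-1], 3))
```

With these made-up numbers the room settles at about 17.5 degrees rather than 20: a purely proportional controller leaves a steady offset whenever heat keeps leaking away, which is why many practical controllers switch on and off or add integral action instead.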

Mathematical Algorithm

A second aspect of design is the algorithm used to implement the specific control mechanism. Suen and Navlakha describe the system as “multiplicative-increase multiplicative-decrease” (MIMD). The MIMD closed-loop system is a hybrid combination of positive and negative feedback. Receiving positive feedback results in multiplying the response by a constant factor greater than one, while negative feedback results in scaling the response down by a constant factor less than one. The purpose relates to the challenge of optimizing ant foraging. As the paper explains:

If foraging rates exceed the rate at which food becomes available, then many ants would return “empty-handed,” resulting in little or no net gain in colony resources. If foraging rates are lower than the food availability rate, then seeds would be left in the environment uncollected, meaning the seeds would either be lost to other colonies or be removed by wind and rain.

The authors found that positive feedback systems are “used to achieve multiple goals, including efficient allocation of available resources, the fair or competitive splitting of those resources, minimization of response latency, and the ability to detect feedback failures.” However, positive feedback control systems are susceptible to instability (think of the annoying screech when there is feedback into microphones in a sound system). Therefore, a challenge for MIMD systems is to minimize instability.

In this application, when foraging times are short, the feedback is positive, resulting in a faster increase in the number of foragers. When foraging times are longer, the feedback is negative, resulting in a reduction in the number of foragers. A mathematical model of the behavior has confirmed that the control algorithm is largely optimized. (See Prabhakar et al., “The Regulation of Ant Colony Foraging Activity without Spatial Information,” PLOS Computational Biology, 2012.) As I describe in my recent book, Animal Algorithms, the harvester ant algorithm is just one example of behavior algorithms that ants and other social insects employ.
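The multiplicative-increase multiplicative-decrease rule described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the model from Suen and Navlakha’s paper or from Prabhakar et al.: the class name `MIMDForagingController`, the factors 1.5 and 0.75, and the rate bounds are hypothetical values chosen only to show the shape of the rule. Each returning forager is treated as one feedback event: a quick return with food multiplies the outgoing rate by a factor greater than one, and a slow, empty-handed return multiplies it by a factor less than one.

```python
from dataclasses import dataclass


@dataclass
class MIMDForagingController:
    """Hypothetical MIMD controller for a colony's rate of sending out foragers.

    All default values are illustrative assumptions, not measured ant parameters.
    """

    rate: float = 1.0              # outgoing foragers per time step (assumed units)
    increase_factor: float = 1.5   # > 1: applied on positive feedback
    decrease_factor: float = 0.75  # < 1: applied on negative feedback
    min_rate: float = 0.1          # keep a trickle of foragers even after bad news
    max_rate: float = 50.0         # cap that limits runaway growth (instability)

    def update(self, returned_with_food: bool) -> float:
        """Apply one MIMD step for a single returning forager and return the new rate."""
        factor = self.increase_factor if returned_with_food else self.decrease_factor
        # Multiplicative change in both directions, clamped to the allowed range.
        self.rate = min(self.max_rate, max(self.min_rate, self.rate * factor))
        return self.rate


if __name__ == "__main__":
    colony = MIMDForagingController()
    for outcome in [True, True, False, True, False, False]:
        print(colony.update(outcome))
```

The upper and lower bounds stand in for the instability problem mentioned earlier: without some cap, a long run of positive feedback would make the rate grow geometrically.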

Suen and Navlakha point out that the mechanism is similar to that employed to regulate traffic on the Internet. In the latter context, there are billions of “agents” continuously transmitting data. Algorithms are employed to control and optimize traffic flow. The challenge for Internet operations is to maximize capacity and allow for relatively equal access for users. Obviously, Internet network control is designed by intelligent engineers. In contrast, the harvester ant behavior is carried out by individuals without any central control mechanism.
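For comparison, the best-known Internet example is TCP congestion control, which is usually described as additive-increase multiplicative-decrease (AIMD) rather than MIMD: the sending window grows by a fixed amount while data is acknowledged and is cut by a constant factor when a loss signals congestion. The sketch below is a deliberately simplified model resting on several assumptions (one update per round trip, a window measured in segments, and the hypothetical function name `aimd_window`); it is not real TCP code, which adds slow start, timeouts, and other mechanisms.

```python
def aimd_window(
    acked_each_round: list[bool],
    start: float = 1.0,
    increase: float = 1.0,         # segments added per successful round trip (assumed)
    decrease_factor: float = 0.5,  # multiplicative cut applied on a loss (assumed)
    min_window: float = 1.0,
) -> list[float]:
    """Toy AIMD congestion window: additive increase, multiplicative decrease."""
    window = start
    history = [window]
    for acked in acked_each_round:
        if acked:
            window += increase  # additive increase while the network keeps up
        else:
            window = max(min_window, window * decrease_factor)  # back off sharply on loss
        history.append(window)
    return history


if __name__ == "__main__":
    print(aimd_window([True, True, True, False, True]))
```

The difference from the ant rule lies in the increase step: adding a constant grows the window linearly, whereas a multiplicative rule grows it geometrically, reacting faster but potentially overshooting more easily.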

Physical Sensors

A third feature indicating design is the physical mechanism used by the ants to determine how long returning foragers have been out. When ants forage for food, molecules called cuticular hydrocarbons change based on the amount of time spent foraging. This is due to the difference in temperature and humidity outside of the nest. As the ants return to the entrance of the nest, there are interactions between the returning and the outgoing ants via their antennae. These interactions enable detection of the hydrocarbons, which provide a mechanism to enable outgoing ants to determine the amount of time that returning ants spent foraging.

These three elements of harvester ant behavior (feedback control, mathematical algorithm, and physical sensors) present a severe challenge for the evolutionary paradigm. From a Darwinian perspective, they must have arisen through a combination of random mutations and natural selection. A much more plausible explanation is that they are evidence of intelligent design.

Christendom's role in the war on logic and common sense.

Hosea 11:9 KJV "I will not execute the fierceness of mine anger, I will not return to destroy Ephraim: for I am God, and not man; the Holy One in the midst of thee: and I will not enter into the city."

Having rejected the immutability of the most fundamental binary of all (i.e., that between creator and creature) with their nonsensical God-man hypothesis, why are so many of Christendom's clerics puzzled that many of their flock find no issue with rejecting the immutability of the far less fundamental gender binary?

You say that Darwinism invokes the free-lunch fallacy, defies mathematical falsification, and furthermore is a clear violation of Occam's razor? Tell us about it, trinitarian!

If God can become man, why can't the same sovereign power make it possible for any chosen creature to become God?

I mean, if God can be three and yet one with no contradiction, he can be nine and yet three with no contradiction. Don't believe me? Consider:

Revelation 1:4, 5 NASB "John to the seven churches that are in Asia: Grace and peace to you from Him who is and who was and who is to come, and from the seven spirits who are before His throne and from Jesus Christ..." That makes a total of nine members of the multipersonal Godhead revealed in scripture, yet there is no principle in Christendom's philosophy that can be invoked to limit it to this figure. That's the thing with rejecting common sense as a principle: once you are off the reservation, all bets are off.

Wednesday, 6 April 2022

"Jesus wept" but why?

John 11:34, 35 KJV "And said, Where have ye laid him? They said unto him, Lord, come and see. 35 Jesus wept."

Why these tears for a saint who had finally received his reward? If Jesus and his followers honestly believed that Lazarus was in heaven, joyfully cavorting with the angels and saints in the presence of JEHOVAH God himself, would they not have responded quite differently to news of his departure from this life?

John 11:24 KJV "Martha saith unto him, I know that he shall rise again in the resurrection at the last day." Note Martha's actual hope for her brother, though.

Where would she have gotten such an idea? From her Lord, perhaps?

John 6:39 KJV "And this is the Father's will which hath sent me, that of all which he hath given me I should lose nothing, but should raise it up again at the LAST DAY."

No one goes to heaven when they die, including Jesus himself. John 20:17 KJV "Jesus saith unto her, Touch me not; for I am not yet ascended to my Father: but go to my brethren, and say unto them, I ascend unto my Father, and your Father; and to my God, and your God."

Acts 2:31 KJV "He seeing this before spake of the resurrection of Christ, that his soul was not left in hell, neither his flesh did see corruption." Thus, like everyone else, Jesus went to hell (sheol) when he died. His hope was his God and Father, just like the rest of us. Hebrews 5:7 KJV "Who in the days of his flesh, when he had offered up prayers and supplications with strong crying and tears unto him that was able to save him from death, and was heard in that he feared;"

John 11:34 KJV "And said, Where have ye laid him? ..." Note, please, that our Lord did not ask where have you laid his body, but where have you laid HIM: third person singular, referring to the person. Obviously Lazarus was not in heaven. How could it be regarded as a kindness to recall anyone from the joy of heaven to the trials of this present age? Reject the mental contortions necessary to believe Christendom's falsehoods.

Well, they do spend a lot of time in school.

Did Researchers Teach Fish to “Do Math”?

University of Bonn researchers think that they may have taught fish to count. Many life forms can tell “one more” from “one less” in small quantities, at least up to five items, and the researchers wanted to see whether fish can too. Not much work had been done on fish in this area, so they decided to test eight freshwater stingrays and eight cichlids:

All of the fish were taught to recognize blue as corresponding to “more” and yellow to “less.” The fish or stingrays entered an experimental arena where they saw a test stimulus: a card showing a set of geometric shapes (square, circle, triangle) in either yellow or blue. In a separate compartment of the tank, the fish were then presented with a choice stimulus: two gates showing different numbers of shapes in the same color. When the fish were presented with blue shapes, they were supposed to swim toward the gate with one more shape than the test stimulus image. When presented yellow shapes, the animals were supposed to choose the gate with one less. Correct choices were rewarded with a food pellet. Three of the eight stingrays and six cichlids successfully learned to complete this task.

Sophie Fessl, “Scientists Find That Two Species Can Be Trained to Distinguish Quantities That Vary by One” at The Scientist (March 31, 2022). The paper is open access.

“The Problem Is the Interpretation”

But were the fish really counting?

Rafael Núñez, a cognitive scientist at the University of California, San Diego, who was not involved in the study, regards it as “well conducted,” adding that “the problem is the interpretation.” For him, the paper provides information about what, in a 2017 paper, he termed “quantical cognition”: the ability to differentiate between quantities. According to Núñez, arithmetic or counting doesn’t have to be invoked to explain the results in the present paper. “I could explain this result by . . . a fish or stingray having the perceptual ability to discriminate quantities: in this case, this will be to learn how to pick, in the case of blue, the most similar but more, and in the case of yellow, the most similar but less. There’s no arithmetic here, just more and less and similar.”

Sophie Fessl, “Scientists Find That Two Species Can Be Trained to Distinguish Quantities That Vary by One” at The Scientist (March 31, 2022). The paper is open access.
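Núñez's point can be made concrete. The Python sketch below writes the training rule two ways: once with arithmetic (add or subtract one), and once using only the comparisons he mentions ("more", "less", "most similar"). The function names and the two-gate trial layout are assumptions for illustration, not the researchers' protocol or code. The point is only that both rules pick the same gate on trials like the ones described.

```python
def arithmetic_choice(color, test_count, gates):
    """Pick the gate showing exactly one more (blue) or one less (yellow)."""
    target = test_count + 1 if color == "blue" else test_count - 1
    return gates.index(target)  # raises ValueError if no gate matches


def comparison_choice(color, test_count, gates):
    """Pick the most similar gate that is 'more' (blue) or 'less' (yellow),
    using only comparisons, with no adding or subtracting.
    Raises ValueError if no gate qualifies."""
    if color == "blue":
        best = min(g for g in gates if g > test_count)  # smallest 'more'
    else:
        best = max(g for g in gates if g < test_count)  # largest 'less'
    return gates.index(best)


if __name__ == "__main__":
    # Hypothetical trial: the test card shows 3 shapes, the gates show 2 and 4.
    for color in ("blue", "yellow"):
        print(color, arithmetic_choice(color, 3, [2, 4]),
              comparison_choice(color, 3, [2, 4]))
```

The two rules come apart only on trials where no gate shows exactly one more or one less, which is why the choice stimuli matter so much for the interpretation.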

Infants, Fish, and Bees

The problem, as Núñez says, is with interpretation. Animal cognition researcher Silke Goebel points out that many life forms can distinguish between “more” and “less” in large numbers. Researchers have also found that, so far, infants, fish, and bees can recognize changes in number between 1 and 3. But they don’t get much beyond that.

To say seriously that fish “do math” would, of course, be misleading. Mathematics is an abstract enterprise. The same operations that work for single digits work for arbitrarily large numbers. It is possible to calculate using infinite (hyperreal) numbers. There are imaginary numbers, unexplained/unexplainable numbers, and at least one unknowable number. But we are stepping out into territory here that will not get a fish its food pellet.

Still, it’s a remarkable discovery that many life forms can manipulate quantities in a practical way. Here are some other recent highlights.

Read the rest at Mind Matters News, published by Discovery Institute’s Bradley Center for Natural and Artificial Intelligence.

Can this tree be re-planted?

Sara Walker and Her Crew Publish the Most Interesting Biology Paper of 2022 (So Far, Anyway)

We’ve just ended the first quarter of the year. It’s a long way to New Year’s Eve 2022. But this new open access paper from senior author Sara Walker (Arizona State) and her collaborators will be hard to top, in the “Wow, that is so interesting!” category. (The first author of this paper is Dylan Gagler, so we’ll refer to it as “Gagler et al. 2022” below.)

1. Back in the day, the best evidence for a single Tree of Life, rooted in the Last Universal Common Ancestor (LUCA), was the apparent biochemical and molecular universality of Earth life.

Leading neo-Darwinian Theodosius Dobzhansky expressed this point eloquently in his famous 1973 essay, “Nothing in biology makes sense except in the light of evolution”:

The unity of life is no less remarkable than its diversity… Not only is the DNA-RNA genetic code universal, but so is the method of translation of the sequences of the “letters” in DNA-RNA into sequences of amino acids in proteins. The same 20 amino acids compose countless different proteins in all, or at least in most, organisms. Different amino acids are coded by one to six nucleotide triplets in DNA and RNA. And the biochemical universals extend beyond the genetic code and its translation into proteins: striking uniformities prevail in the cellular metabolism of the most diverse living beings. Adenosine triphosphate, biotin, riboflavin, hemes, pyridoxin, vitamins K and B12, and folic acid implement metabolic processes everywhere. What do these biochemical or biologic universals mean? They suggest that life arose from inanimate matter only once and that all organisms, no matter how diverse in other respects, conserve the basic features of the primordial life. [Emphasis added.]

For Dobzhansky, as for all neo-Darwinians (by definition), the apparent molecular universality of life on Earth confirmed Darwin’s prediction that all organisms “have descended from some one primordial form, into which life was first breathed” (1859, 494) — an entity now known as the Last Universal Common Ancestor, or LUCA. So strong is the pull of this apparent universality, rooted in LUCA, that any other historical geometry seems unimaginable.

The “Laws of Life”

Theoretician Sara Walker and her team of collaborators, however, are looking for an account of what they call (in Gagler et al. 2022) the “laws of life” that would apply “to all possible biochemistries” — including organisms found elsewhere in the universe, if any exist. To that end, they wanted to know if the molecular universality explained under neo-Darwinian theory as material descent from LUCA (a) really exists, and (b) if not, what patterns do exist, and how might those be explained without presupposing a single common ancestor.

And a single common ancestor, LUCA? That’s what they didn’t find.

2. Count up the different enzyme functions — and then map that number within the total functional space.

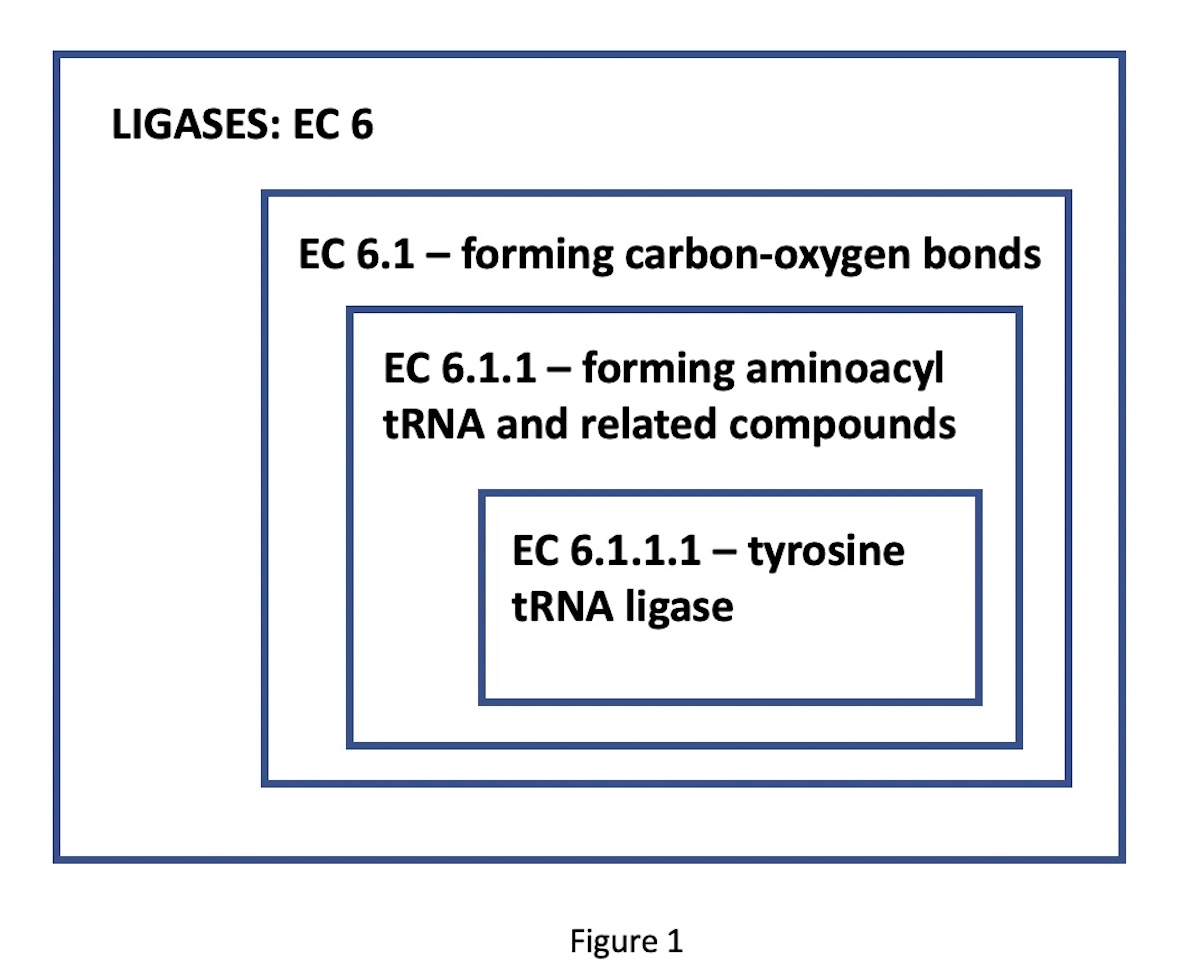

Many thousands of different enzyme functional classes, necessary for the living state, have been described and catalogued in the Enzyme Commission Classification, according to their designated EC numbers. These designators have four numbers, separated by periods, corresponding to progressively more specific functional classes. For instance, consider the enzyme tyrosine-tRNA ligase. Its EC number, 6.1.1.1, indicates a nested set of classes: EC 6 comprises the ligases (bond-forming enzymes); EC 6.1, those ligases forming carbon-oxygen bonds; EC 6.1.1, ligases forming aminoacyl-tRNA and related compounds; finally, EC 6.1.1.1, the specific ligase forming tyrosyl-tRNA. (See Figure 1.)
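The nesting of EC numbers is easy to see in code. In this minimal Python sketch, each EC number is expanded into its chain of parent classes, and two enzymes are compared by how many leading levels they share. The function names and the small lookup table are hypothetical. The sketch also assumes fully specified numeric EC numbers; real EC listings also use placeholders such as "-" for levels that are not assigned.

```python
# Names for the four levels quoted above; a real tool would look these up
# in the Enzyme Commission tables rather than hard-coding them.
EC_CLASS_NAMES = {
    "6": "Ligases",
    "6.1": "Forming carbon-oxygen bonds",
    "6.1.1": "Ligases forming aminoacyl-tRNA and related compounds",
    "6.1.1.1": "Tyrosine-tRNA ligase",
}


def ec_lineage(ec_number):
    """Return the nested classes of a fully specified EC number,
    from the broadest (one field) to the most specific (four fields)."""
    fields = ec_number.split(".")
    if len(fields) != 4 or not all(f.isdigit() for f in fields):
        raise ValueError(f"expected four numeric fields, got {ec_number!r}")
    return [".".join(fields[:i]) for i in range(1, 5)]


def shared_levels(a, b):
    """Count how many leading classification levels two EC numbers share."""
    count = 0
    for x, y in zip(ec_lineage(a), ec_lineage(b)):
        if x != y:
            break
        count += 1
    return count


if __name__ == "__main__":
    for level in ec_lineage("6.1.1.1"):
        print(f"EC {level}: {EC_CLASS_NAMES.get(level, '?')}")
```

Two enzymes that share three levels belong to the same functional subclass, which is the sense in which membership in a class is defined by function rather than by material identity.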

The Main Takeaway from This Pattern?

Being a ligase — namely, an enzyme that forms bonds using ATP — entails belonging to a functional group, but not a group with material identity among its members. A rough parallel to a natural language such as English may be helpful. Suppose you wanted to express the idea of “darkness” or “darkened” (i.e., the relative absence of light). English supplies a wide range of synonyms for “darkened,” such as:

- murky

- shaded

- shadowed

- dimmed

- obscured

The same would be the case — the existence of a set of synonyms, i.e., words with the same general meaning, but not the same sequence identity — for any other idea. The concept of something being “blocked,” for instance, takes the synonyms:

- jammed

- occluded

- prevented

- obstructed

- hindered

While these words convey (approximately) the same meaning, and hence fall into the same semantic functional classes, they are not the same character strings. Their locations in an English dictionary, ordered by alphabet sequence, may be hundreds of pages apart. Moreover, as studied by the discipline of comparative philology, the historical roots of a word such as “hindered” will diverge radically from its functional synonyms, such as “blocked.” These two words, although semantically largely synonymous, enter English from originally divergent or unrelated antecedents — a character string gap still reflected by their very different spellings.

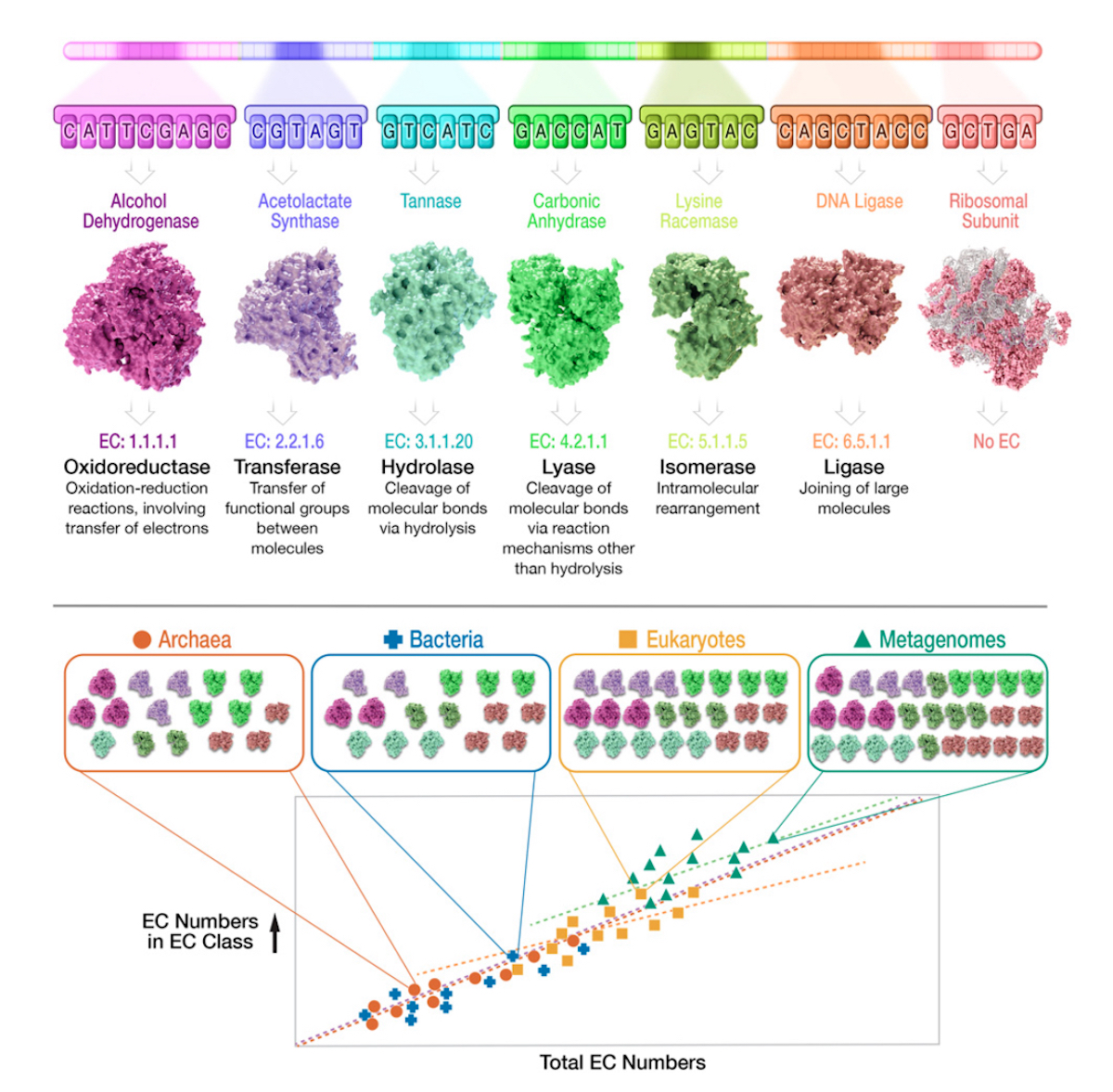

A strikingly similar pattern obtains with the critical (essential) components of all organisms. Gagler et al. 2022 looked at the abundances of enzyme functions across the three major domains of life (Bacteria, Archaea, Eukarya), as well as in metagenomes (environmentally sampled DNA). What they found was remarkable — a finding (see below) which may be easier for non-biological readers to understand via another analogy.

3. A segue into computer architectures — then back to enzymes.

The basic architecture of laptop computers includes components present in any such machine, defined by their functional roles:

- Central processing unit (CPU) — the primary logic operator

- Memory — storage of coded information

- Power supply — electrons (energy) needed for anything at all to be computed

And so on. (Although exploring this point in detail would take us far afield, it is worth noting that in 1936, when Alan Turing defined a universal computational machine, he did so with no idea about the arrival, decades down the road, of silicon-based integrated circuits, miniaturized transistors, motherboards, solid-state memory devices, or any of the rest of the material parts of computers now so familiar to us. Rather, his parts were functionally, not materially defined, as abstractions occupying the various roles those parts would play in the computational process — whatever their material instantiation would later turn out to be.) Now suppose we examined 100,000 laptops, randomly sampled from around the United States, to see what type of CPU — meaning which material part (e.g., built by which manufacturer) — each machine used as its primary logic operator.

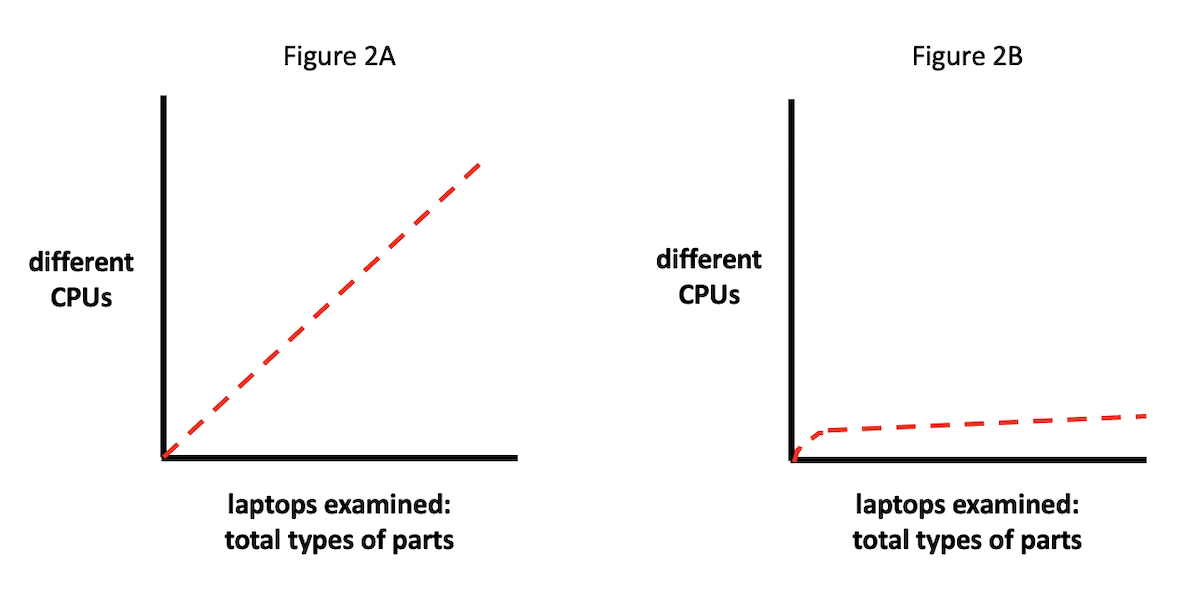

A range of outcomes is possible (see Figures 2A and 2B). For instance, if we plot CPUs from different manufacturers on the y axis, against the total number of laptop parts inspected on the x axis, it might be the case that the distribution of differently manufactured (i.e., materially distinct) CPUs would scale linearly with laptops inspected (Figure 2A). In other words, as our sample of inspected laptop parts grows, the number of different CPUs discovered would trend upwards correspondingly.

Or — and this fits, of course, with the actual situation we find (see Figure 2B) — most of the laptops would contain CPUs manufactured either by Intel or AMD. In this case, we would plot a line whose slope would change much more slowly, staying largely flat, in fact, after the CPUs from Intel and AMD were tallied.
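The two outcomes in Figures 2A and 2B can be reproduced with a few lines of Python. The sketch below tallies how many distinct CPU makers have been seen after each laptop is inspected. The function name is hypothetical, and the sample lists are made up for illustration; they are not survey data.

```python
def distinct_count_curve(samples):
    """For each prefix of the sample, count how many distinct kinds have been seen."""
    seen = set()
    curve = []
    for item in samples:
        seen.add(item)
        curve.append(len(seen))
    return curve


if __name__ == "__main__":
    # Scenario A (hypothetical): every laptop has a CPU from a different maker.
    scenario_a = [f"maker_{i}" for i in range(8)]
    # Scenario B (hypothetical): every laptop uses one of two makers.
    scenario_b = ["Intel", "AMD", "Intel", "Intel", "AMD", "Intel", "AMD", "Intel"]
    print(distinct_count_curve(scenario_a))  # rises by one each time
    print(distinct_count_curve(scenario_b))  # flattens out at 2
```

Plotting each curve against the number of laptops inspected gives the straight line of Figure 2A and the nearly flat line of Figure 2B, respectively.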

The Core Rationale of Their Approach

Now consider Figure 3 (below), from the Gagler et al. 2022 paper. This shows the core rationale of their approach: tally the EC-classified enzyme “parts” within each of the major domains, and from metagenomes, and then plot that tally against the total EC numbers.

Figure 3 also shows their main finding. As the enzyme reaction space grows (on the horizontal axis, total EC numbers), so does the number of unique functions (on the vertical axis, EC numbers in each EC class).
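Scaling relationships like this are commonly summarized as a power law, N_class ≈ c · N_total^α, where the exponent α is the slope of a straight line on a log-log plot. The Python sketch below estimates α and c by ordinary least squares on the logarithms. It is a generic illustration under that power-law assumption. The function name is hypothetical, and the data are synthetic (generated with α = 0.8 and c = 0.5). It does not reproduce Gagler et al.'s data or their exact fitting procedure.

```python
import math


def fit_power_law(xs, ys):
    """Fit ys ≈ c * xs**alpha by least squares on log(x), log(y).

    Returns (alpha, c). All values must be positive.
    """
    lx = [math.log(x) for x in xs]
    ly = [math.log(y) for y in ys]
    n = len(lx)
    mean_x = sum(lx) / n
    mean_y = sum(ly) / n
    sxx = sum((a - mean_x) ** 2 for a in lx)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(lx, ly))
    alpha = sxy / sxx                       # slope on the log-log plot
    c = math.exp(mean_y - alpha * mean_x)   # intercept, back-transformed
    return alpha, c


if __name__ == "__main__":
    totals = [100, 300, 1000, 3000]                # synthetic "total EC numbers"
    per_class = [0.5 * t ** 0.8 for t in totals]   # synthetic counts for one class
    alpha, c = fit_power_law(totals, per_class)
    print(f"alpha = {alpha:.3f}, c = {c:.3f}")
```

An exponent below 1 would mean a class grows more slowly than the enzyme repertoire as a whole; an exponent above 1, faster.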

The lesson that Gagler et al. 2022 draw from this discovery? The pattern is NOT due to material descent from a single common ancestor, LUCA. Indeed, under the heading, “Universality in Scaling of Enzyme Function Is Not Explained by Universally Shared Components,” they explain that material descent from LUCA would entail shared “microscale features,” meaning “specific molecules and reactions used by all life,” or “shared component chemistry across systems.” If we use the CPU / laptop analogy, this microscale commonality would be equivalent to finding CPUs from the same manufacturer, with the same internal logic circuits, in every laptop we examine.

But what Gagler et al. 2022 found was a macroscale pattern, “which does not directly correlate with a high degree of microscale universality,” and “cannot be explained directly by the universality of the underlying component functions.” In an accompanying news story, project co-author Chris Kempes, of the Santa Fe Institute, described their main finding in terms of functional synonyms: macroscale functions are required, but not the identical lower-level components:

“Here we find that you get these scaling relationships without needing to conserve exact membership. You need a certain number of transferases, but not particular transferases,” says SFI Professor Chris Kempes, a co-author on the paper. “There are a lot [of] ‘synonyms,’ and those synonyms scale in systematic ways.”

As Gagler et al. frame the point in the paper itself (emphasis added):

A critical question is whether the universality classes identified herein are a product of the shared ancestry of life. A limitation of the traditional view of biochemical universality is that universality can only be explained in terms of evolutionary contingency and shared history, which challenges our ability to generalize beyond the singular ancestry of life as we know it. …Instead, we showed here that universality classes are not directly correlated with component universality, which is indicative that it emerges as a macroscopic regularity in the large-scale statistics of catalytic functional diversity. Furthermore, EC universality cannot simply be explained due to phylogenetic relatedness since the range of total enzyme functions spans two orders of magnitude, evidencing a wide coverage of genomic diversity.

Sounds Like Intelligent Design

It is interesting to note that this paper was edited (for the PNAS) by Eugene Koonin of the National Center for Biotechnology Information. For many years, Koonin has argued in his own work that the putative “universality due to ancestry” premise of neo-Darwinian theory no longer holds, due in large measure to what he and others have termed “non-orthologous gene displacement” (NOGD). NOGD is a pervasive pattern of the use of functional synonyms — enzyme functions being carried out by different molecular actors — in different species. In 2016, Koonin wrote:

As the genome database grows, it is becoming clear that NOGD reaches across most of the functional systems and pathways such that there are very few functions that are truly “monomorphic”, i.e. represented by genes from the same orthologous lineage in all organisms that are endowed with these functions. Accordingly, the universal core of life has shrunk almost to the point of vanishing…there is no universal genetic core of life, owing to the (near) ubiquity of NOGD.

Universal functional requirements, but without the identity of material components — sounds like design.

Alas, OOL science just can't get a break

Origin of Life: The Problem of Cell Membranes

Wow, the new Long Story Short video is out now, and I think it’s the best one yet — it’s amazingly clear and quite funny. You’ll want to share it with friends. Some past entries in the series have considered the problems associated with chemical evolution, or abiogenesis, how life could have emerged from non-life on the early Earth without guidance or design. The new video examines cell membranes, which some might imagine as little more than a soap bubble or an elastic balloon. This is VERY far from the case.

To keep the cell alive, there’s an astonishing number of complex and contradictory things a cell membrane needs to do. If unassisted by intelligent design, how did the very first cell manage these tricks? It’s a puzzle, since “The membrane had to be extremely complex from the very BEGINNING, or life could never begin.” Some materialists have an answer: protocells, a simpler version of the simplest cells we know of today. But, asks Long Story, could a necessarily fragile, simpler cell survive without assistance from its environment, something like a hospital ICU? It seems not. If so, that makes any unguided scenario of abiogenesis a non-starter. We’ll have more to say in coming days about the science behind this.

<iframe width="770" height="433" src="https://www.youtube.com/embed/BHRcPTS1VHc" title="YouTube video player" frameborder="0" allow="accelerometer; autoplay; clipboard-write; encrypted-media; gyroscope; picture-in-picture" allowfullscreen></iframe>

Yet more on why we can't take OOL science seriously

Origin of Life: Top Three Problems with Protocells

The latest video in the Long Story Short series was released this week on YouTube. The video explains how cell membranes in all of life display complexity that cannot be explained by purely natural processes. See my comments from yesterday, “New Animated Video: Cell Membranes by Natural Processes Alone?,” adding some supporting details to the argument. Here’s more.

As we uncover layer after layer of the astounding complexity of even the simplest forms of life, the origin-of-life research community increasingly relies upon its trump card: imaginary protocells that supposedly existed long ago and were dramatically simpler than existing life. As the story goes, modern life may indeed be very complex, but protocells used to be much simpler, and there was plenty of time for the complexity to develop.

Protocells conveniently fill the uncomfortably large gap between the simple molecules that can be produced by prebiotic processes and the staggering complexity of all extant life. But there are three major problems with the concept of protocells. These problems are all backed by strong empirical support, in sharp contrast with the concept of protocells.

A Coddling Environment

First, scientists have been working for decades to simplify existing life, trying to arrive at a minimal viable life form by jettisoning anything that is not essential from the simplest extant cells. The success of Craig Venter’s group is well known. Building on their efforts to produce synthetic life (“Synthia” or “Mycoplasma laboratorium”) in 2010,[1,2] in 2016 they introduced the current record holder for the simplest autonomously reproducing cell (JCVI-syn3.0).[3] With a genome of only 473 genes and 520,000 base pairs of DNA, JCVI-syn3.0 can reproduce autonomously, but it certainly isn’t robust. Keeping it alive requires a coddling environment, essentially a life-support system. To arrive at a slightly more stable and robust organism that reproduced faster, the team later added back 19 genes, producing JCVI-syn3A.[4] Taken together, this work provides an approximate boundary for the simplest possible self-replicating life. We are clearly approaching the limit of viable cell simplicity. It seems safe to conclude that at least 400 genes (and approximately 500,000 base pairs of DNA) are the minimum requirements to produce a self-replicating cell.

Exporting to the Environment

Second, we know that the process of simplifying an existing cell by removing some of its functionality doesn’t actually simplify the overall problem; it only exports the required complexity to the environment. A complex, robust cell can survive in changing conditions with varying food sources. A simplified cell becomes dependent on the environment to provide a constant, precise stream of the required nutrients. In other words, the simplified cell has a reduced ability to maintain homeostasis, so it can remain alive only if the environment takes on the responsibility for homeostasis. Referring to JCVI-syn3A, Thornburg et al. conclude, “Unlike most organisms, which have synthesis pathways for most of [their] building blocks, Syn3A has been reduced to the point where it relies on having to transport them in.”[5] This implies that the environment must provide a continuous supply of more specific and complex nutrients. The only energy source that JCVI-syn3A can process is glucose,[4] so the environment must provide a continuous supply of its only tolerable food. Intelligent humans can provide such a coddling life-support environment, but a prebiotic Earth could not. Protocells would therefore place untenable requirements on their environment, and those requirements would have to be met consistently for millions of years.

Striving for Simplicity

Third, we know that existing microbes are constantly trying to simplify themselves, to the extent that their environment will allow. In Richard Lenski’s famous E. coli experiment, the bacteria simplified themselves by jettisoning their ribose operons after a few thousand generations, because they didn’t need to metabolize ribose and they could replicate 2 percent faster without it, which provided a selective advantage.[6] Furthermore, Kuo and Ochman studied the well-established tendency of prokaryotes to minimize their own DNA, concluding: “deletions outweigh insertions by at least a factor of 10 in most prokaryotes.”[7] This means that existing life has been trying from the very start to be as simple as possible. Therefore, it is likely that extant life has already reached something close to the simplest possible form, unless experimenters like Lenski provide a coddling environment for a long duration that allows further simplification. But such an environment requires the intervention of intelligent humans to provide just the right ingredients, at the right concentrations, and at the right time. No prebiotic environment could do this. Therefore, scientists need not try to simplify existing life: we already have good approximations of the simplest form. Indeed, Mycoplasma genitalium has a genome of 580,000 base pairs and 468 genes,[8] whereas Craig Venter’s minimal “synthetic cell” JCVI-syn3.0 has a comparable genome of 520,000 base pairs and 473 genes.[3]

The data provide a clear picture: the surprising complexity of even the simplest forms of existing life (500,000 base pairs of DNA) cannot be avoided and cannot be reduced unless intelligent agents provide a complex life-support environment. Because protocells would have had to survive and reproduce on a harsh and otherwise lifeless planet, protocells are not a viable concept. Protocells place origin-of-life researchers in a rather awkward position: relying upon an imaginary entity to sustain their belief that only matter and energy exist.

References

1. Gibson DG et al. Creation of a bacterial cell controlled by a chemically synthesized genome. Science 2010; 329: 52–56.

2. Gibson DG et al. Synthetic Mycoplasma mycoides JCVI-syn1.0 clone sMmYCp235-1, complete sequence. NCBI Nucleotide, 2010. Identifier: CP002027.1.

3. Hutchison CA III et al. Design and synthesis of a minimal bacterial genome. Science 2016; 351: 1414.

4. Breuer et al. eLife 2019; 8: e36842. DOI: https://doi.org/10.7554/eLife.36842.

5. Thornburg ZR et al. Fundamental behaviors emerge from simulations of a living minimal cell. Cell 2022; 185: 345–360.

6. Cooper VS et al. Mechanisms causing rapid and parallel loss of ribose catabolism in evolving populations of Escherichia coli B. J Bacteriol 2001: 2834–2841.

7. Kuo CH and Ochman H. Deletional bias across the three domains of life. Genome Biol Evol 1: 145–152.

8. Fraser CM et al. The minimal gene complement of Mycoplasma genitalium. Science 1995; 270: 397–403.

Friday, 1 April 2022

The ministry of truth is a thing?

<iframe width="853" height="480" src="https://www.youtube.com/embed/1z2l3HLeKwc" title="YouTube video player" frameborder="0" allow="accelerometer; autoplay; clipboard-write; encrypted-media; gyroscope; picture-in-picture" allowfullscreen></iframe>

Byzantium: A brief history.

Byzantium (/bɪˈzæntiəm, -ʃəm/) or Byzantion (Greek: Βυζάντιον) was an ancient Greek city in classical antiquity that became known as Constantinople in late antiquity and Istanbul today. The Greek name Byzantion and its Latinization Byzantium continued to be used as a name of Constantinople sporadically and to varying degrees during the thousand year existence of the Byzantine Empire.[1][2] Byzantium was colonized by Greeks from Megara in the 7th century BC and remained primarily Greek-speaking until its conquest by the Ottoman Empire in AD 1453.[3]

Etymology

The etymology of Byzantium is unknown. It has been suggested that the name is of Thracian origin.[4] It may be derived from the Thracian personal name Byzas which means "he-goat".[5][6] Ancient Greek legend refers to the Greek king Byzas, the leader of the Megarian colonists and founder of the city.[7] The name Lygos for the city, which likely corresponds to an earlier Thracian settlement,[4] is mentioned by Pliny the Elder in his Natural History.[8]

Byzántios, plural Byzántioi (Ancient Greek: Βυζάντιος, Βυζάντιοι, Latin: Byzantius; adjective the same) referred to Byzantion's inhabitants, also used as an ethnonym for the people of the city and as a family name.[5] In the Middle Ages, Byzántion was also a synecdoche for the eastern Roman Empire. (An ellipsis of Medieval Greek: Βυζάντιον κράτος, romanized: Byzántion krátos).[5] Byzantinós (Medieval Greek: Βυζαντινός, Latin: Byzantinus) denoted an inhabitant of the empire.[5] The Anglicization of Latin Byzantinus yielded "Byzantine", with 15th and 16th century forms including Byzantin, Bizantin(e), Bezantin(e), and Bysantin as well as Byzantian and Bizantian.[9]

The name Byzantius and Byzantinus were applied from the 9th century to gold Byzantine coinage, reflected in the French besant (d'or), Italian bisante, and English besant, byzant, or bezant.[5] The English usage, derived from Old French besan (pl. besanz), and relating to the coin, dates from the 12th century.[10]

Later, the name Byzantium became common in the West to refer to the Eastern Roman Empire, whose capital was Constantinople. As a term for the east Roman state as a whole, Byzantium was introduced by the historian Hieronymus Wolf only in 1555, a century after the last remnants of the empire, whose inhabitants continued to refer to their polity as the Roman Empire (Medieval Greek: Βασιλεία τῶν Ῥωμαίων, romanized: Basileía tōn Rhōmaíōn, lit. 'empire of the Romans'), had ceased to exist.[11]

Other places were historically known as Byzántion (Βυζάντιον) – a city in Libya mentioned by Stephanus of Byzantium and another on the western coast of India referred to by the Periplus of the Erythraean Sea; in both cases the names were probably adaptations of names in local languages.[5] Faustus of Byzantium was from a city of that name in Cilicia.[5]

History

The origins of Byzantium are shrouded in legend. Tradition says that Byzas of Megara (a city-state near Athens) founded the city when he sailed northeast across the Aegean Sea. The date is usually given as 667 BC on the authority of Herodotus, who states the city was founded 17 years after Chalcedon. Eusebius, who wrote almost 800 years later, dates the founding of Chalcedon to 685/4 BC, but he also dates the founding of Byzantium to 656 BC (or a few years earlier depending on the edition). Herodotus' dating was later favored by Constantine the Great, who celebrated Byzantium's 1000th anniversary between the years 333 and 334.[12]
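Date arithmetic across the BC/AD boundary is error-prone because the calendar has no year zero. The small Python sketch below shows why a founding in 667 BC puts the 1000th anniversary in AD 334, and how adding Herodotus's 17 years to Eusebius's 685/4 BC for Chalcedon lands on 668/7 BC, the neighbourhood of the usual figure. The helper names and the sign convention (negative numbers for BC) are assumptions for illustration.

```python
def to_astronomical(year):
    """Convert a historical year (negative = BC, positive = AD) to
    astronomical numbering, where 1 BC is year 0 and 2 BC is year -1."""
    if year == 0:
        raise ValueError("there is no year 0 in the BC/AD system")
    return year + 1 if year < 0 else year


def from_astronomical(year):
    """Inverse of to_astronomical."""
    return year - 1 if year <= 0 else year


def add_years(year, n):
    """Add n years to a historical year, skipping the missing year 0."""
    return from_astronomical(to_astronomical(year) + n)


def label(year):
    """Format a signed historical year as 'N BC' or 'AD N'."""
    return f"{-year} BC" if year < 0 else f"AD {year}"


if __name__ == "__main__":
    print(label(add_years(-667, 1000)))  # 1000 years after 667 BC
    print(label(add_years(-685, 17)), "/", label(add_years(-684, 17)))
```

Counting the anniversary from the founding year itself, rather than from its end, shifts the result by one, which is one way to read a celebration spanning 333 and 334.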

Byzantium was mainly a trading city due to its location at the Black Sea's only entrance. Byzantium later conquered Chalcedon, across the Bosphorus on the Asiatic side.

The city was taken by the Persian Empire at the time of the Scythian campaign (513 BC) of King Darius I (r. 522–486 BC), and was added to the administrative province of Skudra.[13] Though Achaemenid control of the city was never as stable as that over other cities in Thrace, it was considered, alongside Sestos, to be one of the foremost Achaemenid ports on the European coast of the Bosphorus and the Hellespont.[13]

Byzantium was besieged by Greek forces during the Peloponnesian War. As part of its strategy of cutting off grain supplies to Athens during the siege of that city, Sparta took control of Byzantium in 411 BC to force the Athenians into submission. The Athenian military retook the city in 408 BC, after the Spartans had withdrawn following their settlement.[14]

Because the city had sided with Pescennius Niger against the victorious Septimius Severus, it was besieged by Roman forces and suffered extensive damage in AD 196.[15] Byzantium was rebuilt by Septimius Severus, now emperor, and quickly regained its previous prosperity. It was bound to Perinthus during the period of Septimius Severus.[citation needed] Byzantium's strategic location, highly defensible because it was surrounded by water on almost all sides, attracted the Roman Emperor Constantine I, who in AD 330 refounded it as an imperial residence inspired by Rome itself, known as Nova Roma. Later the city was called Constantinople (Greek Κωνσταντινούπολις, Konstantinoupolis, "city of Constantine").

This combination of imperial status and location shaped Constantinople's role as the nexus between the continents of Europe and Asia. It was a commercial, cultural, and diplomatic centre and for centuries served as the capital of the Byzantine Empire, which decorated the city with numerous monuments, some still standing today. With its strategic position, Constantinople controlled the major trade routes between Asia and Europe, as well as the passage from the Mediterranean Sea to the Black Sea. On May 29, 1453, the city fell to the Ottoman Turks and again became the capital of a powerful state, the Ottoman Empire. The Turks called the city "Istanbul" (although it was not officially renamed until 1930); the name derives from the Greek phrase "eis tēn polin" ("to the city"). To this day it remains the largest and most populous city in Turkey, although Ankara is now the national capital.

Emblem

By the late Hellenistic or early Roman period (1st century BC), the star and crescent motif was associated to some degree with Byzantium, although it was more widely used as the royal emblem of Mithradates VI Eupator (who for a time incorporated the city into his empire).[16]

Some Byzantine coins of the 1st century BC and later show the head of Artemis with bow and quiver, and feature a crescent with what appears to be an eight-rayed star on the reverse. According to accounts that vary in some details, in 340 BC the Byzantines and their allies the Athenians were under siege by the troops of Philip of Macedon. On a particularly dark and wet night Philip attempted a surprise attack but was thwarted by the appearance of a bright light in the sky. Later interpreters describe this light variously as a meteor or as the moon, and some accounts also mention the barking of dogs. However, the original accounts mention only a bright light in the sky, without specifying the moon.[a][b] To commemorate the event the Byzantines erected a statue of Hecate lampadephoros (light-bearer or bringer). This story survived in the works of Hesychius of Miletus, who in all probability lived in the time of Justinian I. His works survive only in fragments preserved in Photius and the tenth-century lexicographer Suidas. The tale is also related by Stephanus of Byzantium and Eustathius.

Devotion to Hecate was especially favored by the Byzantines for her aid in having protected them from the incursions of Philip of Macedon. Her symbols were the crescent and star, and the walls of her city were her provenance.[19]

It is unclear precisely how the symbol of Hecate/Artemis, one of many goddesses,[c] came to be transferred to the city itself, but it seems likely to have followed from the goddess being credited with the intervention against Philip and receiving subsequent honors. This was a common process in ancient Greece, as in Athens, where the city was named after Athena in honor of such an intervention in time of war.

Cities in the Roman Empire often continued to issue their own coinage. "Of the many themes that were used on local coinage, celestial and astral symbols often appeared, mostly stars or crescent moons."[21] The wide variety of these issues, and the varying explanations for the significance of the star and crescent on Roman coinage, preclude their discussion here. It is, however, apparent that by the time of the Romans, coins featuring a star or crescent in some combination were not at all rare.

People

- Homerus, tragedian, lived in the early 3rd century BC

- Philo, engineer, lived c. 280 BC–c. 220 BC

- Epigenes of Byzantium, astrologer, lived in the 3rd–2nd century BC

- Aristophanes of Byzantium, a scholar who flourished in Alexandria, 3rd–2nd century BC

- Myro, a Hellenistic female poet

See also

- Constantinople, which details the history of the city before 1453

- Fall of Constantinople (Turkish conquest of 1453)

- Istanbul, which details the history of the city from 1453 on, and describes the modern city

- Sarayburnu, which is the geographic location of ancient Byzantium

- Timeline of Istanbul history

Notes

- "In 324 Byzantium had a number of operative cults to traditional gods and goddesses tied to its very foundation eight hundred years before. Rhea, called "the mother of the gods" by Zosimus, had a well-ensconced cult in Byzantium from its very foundation. [...] Devotion to Hecate was especially favored by the Byzantines [...] Constantine would also have found Artemis-Selene and Aphrodite along with the banished Apollo Zeuxippus on the Acropolis in the old Greek section of the city. Other gods mentioned in the sources are Athena, Hera, Zeus, Hermes, and Demeter and Kore. Even evidence of Isis and Serapis appears from the Roman era on coins during the reign of Caracalla and from inscriptions." [20]

References

- Molnar, Michael R. (1999). The Star of Bethlehem. Rutgers University Press. p. 48.

Sources

- Balcer, Jack Martin (1990). "BYZANTIUM". In Yarshater, Ehsan (ed.). Encyclopædia Iranica, Volume IV/6: Burial II–Calendars II. London and New York: Routledge & Kegan Paul. pp. 599–600. ISBN 978-0-71009-129-1.

- Harris, Jonathan (2007). Constantinople: Capital of Byzantium. London: Hambledon/Continuum. ISBN 978-1-84725-179-4.

- Jeffreys, Elizabeth; Jeffreys, Michael; Moffatt, Ann (1979). Byzantine Papers: Proceedings of the First Australian Byzantine Studies Conference, Canberra, 17–19 May 1978. Canberra: Australian National University.

- Istanbul Historical Information – Istanbul Informative Guide To The City. Retrieved January 6, 2005.

- The Useful Information about Istanbul. Archived 2007-03-15 at the Wayback Machine. Retrieved January 6, 2005.

- The Oxford Dictionary of Byzantium (1991). Oxford University Press. ISBN 0-19-504652-8.

- Yeats, William Butler. "Sailing to Byzantium".

The term Byzantion remained in use for Constantinople.