<iframe width="853" height="480" src="https://www.youtube.com/embed/A6Q72R1aYwA" title="YouTube video player" frameborder="0" allow="accelerometer; autoplay; clipboard-write; encrypted-media; gyroscope; picture-in-picture" allowfullscreen></iframe>

the bible, truth, God's kingdom, Jehovah God, New World, Jehovah's Witnesses, God's church, Christianity, apologetics, spirituality.

Saturday 23 April 2022

On Russia's "Cosa Nostra"

Saving junk DNA?

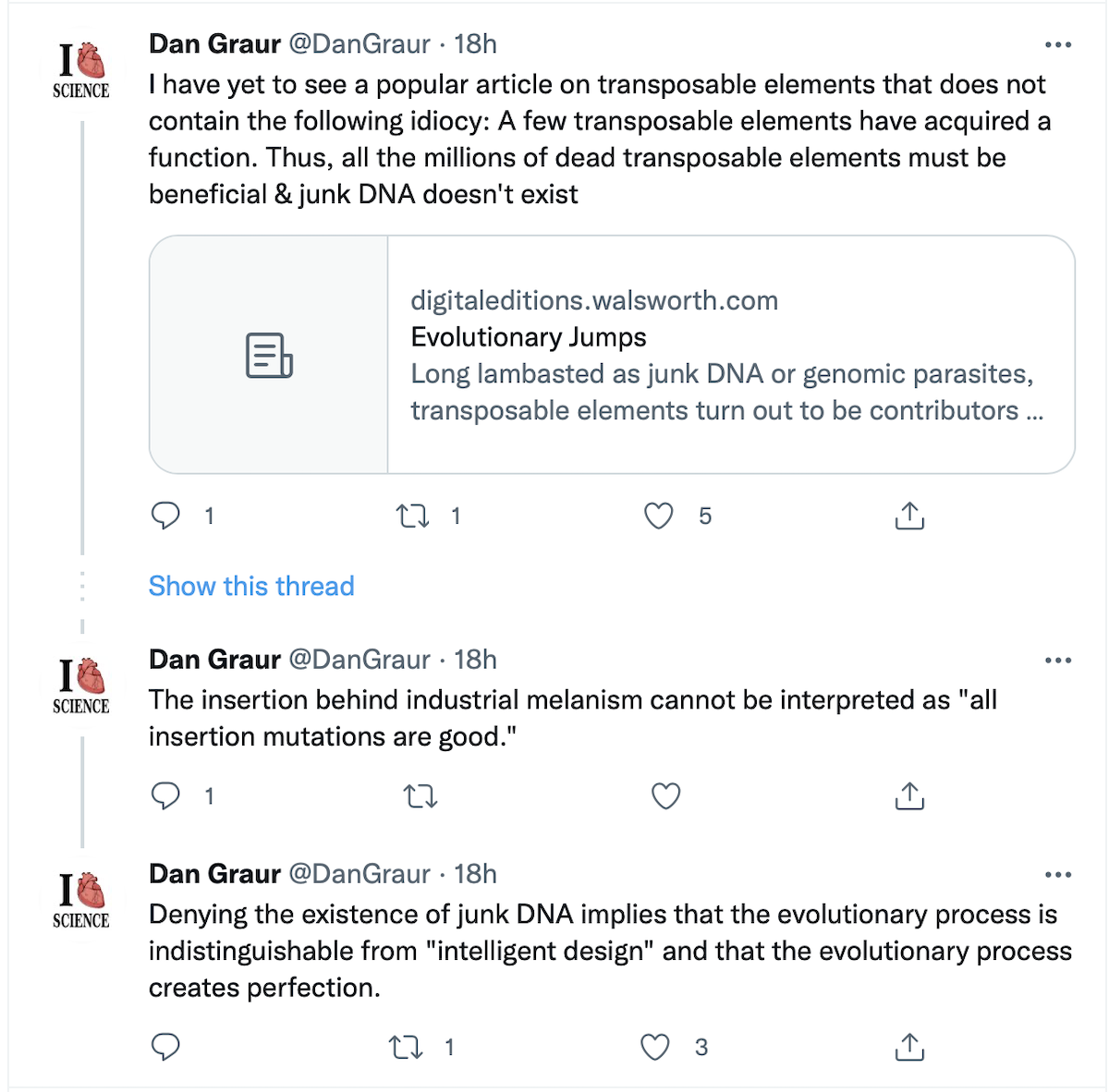

Dan Graur: The Core Rationale for Claiming “Junk DNA”

See the last entry in this Twitter thread (below), and then our follow-up comments under the screen capture. Dan Graur is a University of Houston biologist and prominent junk DNA advocate. The link to the article in The Scientist that he is grumbling about is included at the very end of this post.

So What’s Wrong with Graur’s Argument?

First, it is unlikely that the growing number of biologists looking for possible functions in so-called “junk DNA” are ID proponents (although a few might be).

Consider this syllogism, however:

1. Evolutionary processes lack foresight.

2. Processes without foresight create novel functions only infrequently, more often causing non-functionality.

3. “Junk DNA” appears to be non-functional.

4. Therefore, since evolution is true, it is pointless to look for functions in apparently functionless (“junk”) DNA; what appears to lack function, truly does lack function.

Here’s the Problem

Premises (1) and (2) could be true, as doubtless most biologists would agree, yet (3) does not follow from either premise, and refers in any case only to an appearance, exactly the sort of superficial impression calling for further analysis. Moreover, (4) is a counsel of despair — a GENUINE science-stopper. Since “only infrequently” in premise (2) is defined entirely by the sample size, not by evolutionary theory itself, “no function” claims are empirically unsupportable, resting wholly on negative evidence.

Graur’s argument also affirms the consequent, as tendentious arguments often do. Just take a look at premises (2) and (3) and their logical relation.

That explains why many researchers, who are fully on board with evolution, nonetheless ignore Graur’s advice. They decline to kiss the no-function wall, as Paul Nelson put it back in 2010:

Why Kissing the Wall Is the Worst Possible Heuristic for Biological Discovery

In biology, the claim “structure x has no function” can only topple in one direction, namely, towards the discovery of functions. “No function” represents a brick wall of infinite extent, from which one can only fall backwards, into the waiting arms of a function one didn’t see, or overlooked.

Because one was kissing the wall, so to speak.

Here is the article that got under Graur’s skin: “Evolutionary Jumps,” by Christie Wilcox.

On being in the crosshairs of the ministry of truth.

<iframe width="853" height="480" src="https://www.youtube.com/embed/MpnbMIOvbjc" title="YouTube video player" frameborder="0" allow="accelerometer; autoplay; clipboard-write; encrypted-media; gyroscope; picture-in-picture" allowfullscreen></iframe>

Coups d'état, for fun or profit.

<iframe width="784" height="441" src="https://www.youtube.com/embed/xDuMRAhe7Y0" title="YouTube video player" frameborder="0" allow="accelerometer; autoplay; clipboard-write; encrypted-media; gyroscope; picture-in-picture" allowfullscreen></iframe>

And speaking of election meddling.

<iframe width="784" height="441" src="https://www.youtube.com/embed/f9Q19QJpJ4s" title="YouTube video player" frameborder="0" allow="accelerometer; autoplay; clipboard-write; encrypted-media; gyroscope; picture-in-picture" allowfullscreen></iframe>

Don't give peace a chance?

<iframe width="784" height="441" src="https://www.youtube.com/embed/4IbOUqWbumE" title="YouTube video player" frameborder="0" allow="accelerometer; autoplay; clipboard-write; encrypted-media; gyroscope; picture-in-picture" allowfullscreen></iframe>

Another exposition on engineerless engineering.

Darwinists Seek to Explain the Eye’s Engineering Perfection

Yesterday we looked at a paper by Tom Baden and Dan-Eric Nilsson in Current Biology debunking the old canard that the human eye is a bad design because it is wired backwards. We saw them turn the tables and show that, in terms of performance, the inverted retina is actually as good as or better than the everted retina. Vertebrate eyes “come close to perfect,” they said. Ask the eagles with “the most acute vision of any animal,” which would include cephalopods with their allegedly more logical arrangement. Eagles win! Squids lose! Baden and Nilsson looked at eyes from an “engineer’s perspective” and shared good reasons for the inverted arrangement. They even spoke of design seven times; “the inverted retinal design is a blessing,” they argued.

And yet they maintain that eyes evolved by blind, unguided natural processes. How can they believe that? In this follow-up, we look at the strategies they use to maintain the Darwinian narrative despite the evidence.

Personification

First, they turn evolution into an engineer. Personification is a common ploy by Darwinists. Richard Dawkins envisioned a “blind watchmaker” replacing Paley’s artificer. Darwin even gave natural selection a gender:

Natural selection acts only by taking advantage of slight, successive variations. She can never take a great and sudden leap, but must advance by short and sure, though slow steps.

Evolutionists speak freely of natural selection as a “tinkerer” cobbling together whatever odd parts are handy so that a solution, however awkward, comes to satisfy a need brought on by “selection pressure.” The resulting structures give the illusion of design but are not the work of a rational agent. Neil Thomas calls such stories “agentless acts” by which engineered structures arise by “pure automatism or magical instrumentality quite outside common experience or observability.” Evolutionists need no magician; the rabbit emerges out of the hat spontaneously, as if an invisible hand pulled on its ears. Watch how Baden and Nilsson personify evolution and turn it into a Blind Tweaker and Opportunist:

So, in general, the apparent challenges with an inverted retina seem to have been practically abolished by persistent evolutionary tweaking. In addition, opportunities that come with the inverted retina have been efficiently seized. In terms of performance, vertebrate eyes come close to perfect. [Emphasis added.]

Visualization

A second tactic Baden and Nilsson use is visualization. Figure 3 in their article shows three stages of a possible evolutionary path from a primitive photoreceptor to a “high quality spatial vision” with “usable space” between the lens and retina. “This space could be usefully filled by the addition of neurons that locally pre-process the image picked up by the photoreceptors,” the caption reads. Well, then, what personified tinkerer would not take advantage of such prime real estate? Give the Blind Tweaker room to homestead and you will shortly find him (or her) setting up shop. What’s missing in the visuals are giant leaps over engineered systems in the gaps, and an account of how they all become coordinated to make vision work.

Inevitability

Another tactic used by Baden and Nilsson is the notion of inevitability. Evolution was forced to take the path it did. Evolution had no choice, they imply, because the first time a layer of light-sensitive cells began to invaginate into a cup shape, the path forward was set in concrete.

The specific reason for our own retinal orientation is the way the nervous system was internalised by invagination of the dorsal epidermis into a neural tube in our pre-chordate ancestors. The epithelial orientation in the neural tube is truly inside out. The vertebrate eye cup develops from a frontal (brain) part of the neural tube, where the receptive parts of any sensory cells naturally project inwards into the lumen of the neural tube (the original outside). In contrast, the eyes of octopus and other cephalopods develop from cups formed in the skin, and the original epithelial outside keeps its orientation.

This tactic gives a Darwinist the flexibility to use the same theory to explain opposite things. Evolution is so rigid it must follow the path the ancestor took, but so malleable that all subsequent engineering can be optimized to perfection. Surely, though, if natural selection has the magical powers the Darwinists ascribe to it, it could have ditched ancestral traditions and remodeled the eye cup. Isn’t that how all innovations begin in the theory?

Airy Nothings

The sneakiest tactic used by the authors is what Neil Thomas described as “notional terms.” These are “airy nothings” and “empty signifiers” that gloss over difficulties by replacing evidence with factoids. A factoid, Thomas says, is “a contention without empirical foundation or any locatable referent in the tangible world but one nevertheless held to be true by the person who proposes it” (italics in original). To use this tactic, just assume that your notion is true. Complex things evolved. They originated. They emerged. They arose. They started. They came. They developed. They improved.

Bipolar cells emerged, slotting in between the cones and the ganglion cells to provide a second synaptic layer right in the sensory periphery. Further finesse came through the addition of horizontal and amacrine cells. The result is a structurally highly stereotyped sheet of neuronal tissue present in all extant vertebrates….

Notional terms like these, which assume what needs to be proved, can slip through unnoticed unless one is on guard for them. The following contains two of them, while distracting readers with the ID-friendly argument that the eye is not flawed.

Arguments for a basically flawed orientation of the vertebrate retina are built around an eye that we encounter in a grossly enlarged state compared to its humbler origins. We are now more than 500 million years down the road from where vertebrate vision started in the early Cambrian.

When the readers weren’t paying attention, they were being told that the eye had humble origins in the early Cambrian. A truer statement would have read, “Arguments for a basically flawed orientation of the vertebrate retina are built around the assumption of Darwinian evolution.”

Consensus

Baden and Nilsson might counter that they don’t need to give any details about how evolution achieved engineering perfection, because the scientific community has already reached a consensus that Darwinian evolution explains everything in biology, so they can just take it for granted. This is a form of argument from authority, but worse in this instance. As shown in the previous post, the strongest case for eye evolution — so strong that Richard Dawkins celebrated it publicly — was the 1994 graphic that Jonathan Wells and David Berlinski exposed, and Nilsson was one of the perpetrators! To take that for granted would be like drawing a picture of a unicorn in 1994 and then using that drawing as evidence for unicorns in 2022. Evolutionists leaped onto that 1994 article because nothing better had shown up since Darwin got cold shudders considering this “organ of extreme perfection,” the eye.

The Power of Master Narratives

A doctorate in a relatively rare field of expertise called the Rhetoric of Science is held by Thomas Woodward, author of Doubts About Darwin (2003). In that book he examines the role of “fantasy themes” (which he prefers to call “projection themes”) in the history of the Darwin versus Design debate. One of these is the “progress narrative” that the universe is on a continuous path toward higher complexity (p. 52). These projection themes — not empirical data — were of paramount importance in the wide acceptance of evolution when the Victorian atmosphere was fragrant with feelings of progress. Darwin and his successors had a simple, compelling story that was part “factual-empirical narrative” and part “semi-imaginative narrative” (p. 22).

To understand how Darwinians continue to maintain what appears to ID advocates as cognitive dissonance, i.e., that nature is exquisitely engineered but emerged by blind processes, observers need to be cognizant of the projection themes and rhetorical tactics Darwinians use to forestall a Design Revolution. The ones used by Baden and Nilsson cannot be conquered merely by appeals to empirical facts. They need to be exposed as the ploys they are and supplanted by better narratives founded on stronger evidence and logic.

Charles Darwin the design advocate?

Charles Darwin’s “Intelligent Design”

I wrote here yesterday about Charles Darwin’s orchid book. Shortly after its publication, reviews of the book began appearing in the British press. Unlike with the Origin, the reviews were overwhelmingly positive. Reviewers were extremely impressed with Darwin’s detailed documentation of the variety of contrivances in orchids. But much to Darwin’s dismay, they did not see this as evidence of natural selection.

An anonymous reviewer in the Annals and Magazine of Natural History wrote in response to Darwin’s contention that nature abhors perpetual self-fertilization:

Apart from this theory and that of ‘natural selection,’ which we cannot think is much advanced by the present volume, we must welcome this work of Mr. Darwin’s as a most important and interesting addition to botanical literature.

Other reviewers went much further. M. J. Berkeley, writing in the London Review, said:

…the whole series of the Bridgewater Treatises will not afford so striking a set of arguments in favour of natural theology as those which he has here displayed.

Marvels of Divine Handiwork

A review by R. Vaughn in the British Quarterly Review opined:

No one acquainted with even the very rudiments of botany will have any difficulty in understanding the book before us, and no one without such acquaintance need hesitate to commence the study of it. For, in the first place, it is full of the marvels of Divine handiwork.

According to the Saturday Review:

By contrivances so wonderful and manifold, that, after reading Mr. Darwin’s enumeration of them, we felt a certain awe steal over the mind, as in the presence of a new revelation of the mysteriousness of creation.

“New and Marvelous Instances of Design”

Even Darwin’s pigeon-fancier friend, William Tegetmeier, noted the existence in the book of “new and marvelous instances of design.” And an anonymous reviewer in the British and Foreign Medico-Chirurgical Review wrote:

To those whose delight it is to dwell upon the manifold instances of intelligent design which everywhere surround us, this book will be a rich storehouse.

Darwin’s “flank movement on the enemy” failed miserably. Unable to make a convincing case for natural selection in his broader species work, he tried instead to stealthily impress the scientific world by appeal to the exquisite variety of fertilization methods among orchids. Darwin impressed the scientific world all right. He showed how difficult it is to understand the variety of living organisms without appeal to design.

His orchid book may well be the most important of all Darwin’s publications. It made a unique contribution to 19th-century natural history — or is that natural theology? I can think of no greater irony than the fact that Charles Darwin, who Richard Dawkins felt made it possible to be an intellectually fulfilled atheist, actually bequeathed to 19th-century natural historians one of the most impressive cases for intelligent design ever made.

JEHOVAH may have known what he was doing after all?

Evolutionists: The Eye Is “Close to Perfect”

Two evolutionists have made a stunning admission: the human eye is not oriented backwards. This collapses a long-standing argument for poor design in the eye. There are good reasons why vertebrate eyes have backward-pointing retinas, they say. In fact, human eyes may outperform the eyes of cephalopods, which have been held up as a smarter example of engineering design.

Our analysis of this major rethink will be divided into two parts. First, we will see why the design of the human eye, with its backward-pointing retina, makes sense. Second, we will see how the authors try to rescue Darwinism from this major rethink. Of special interest to this story is that one of the authors, Dan-Eric Nilsson, made a splash with Suzanne Pelger in 1994 with a graphic of eye evolution showing how a light-sensitive spot could evolve into a vertebrate eye in stages. Richard Dawkins made hay with that story. The episode required a lot of fact-checking to refute, as Jonathan Wells and David Berlinski remember.

Terms and Facts

A couple of terms and facts should be enunciated. Cephalopods (octopuses, squid, and cuttlefish) have everted retinas, with the photoreceptor cells pointing toward the light source. Vertebrates all have inverted retinas, with the photoreceptors pointed away from the light source. Some other invertebrates have either one arrangement or the other. Some animals, like zebrafish larvae, have no vitreous space between the lens and the retina. Humans exemplify most vertebrate arrangements with a fluid-filled vitreous humor between the lens and retina.

The new article by Tom Baden and Dan-Eric Nilsson appeared this month in Current Biology, under the title, “Is Our Retina Really Upside Down?” They do not argue that one form is better than the other, but rather, because of trade-offs owing to size, habitat, and behavior, what works for one animal may not be optimal for another.

But in general, it is not possible to say that either retinal orientation is superior to the other. It is the notions of right way or wrong way that fails. Our retina is not upside down, unless perhaps when we stand on our head.

Criticisms of the “Backward Retina” Disappear

Knowing that many of their evolutionary colleagues have criticized the inverted retina as a bad design, Baden and Nilsson elaborate on the criticism then state their thesis:

From an engineer’s perspective, these problems could be trivially averted if the retina were the other way round, with photoreceptors facing towards the centre of the eye. Accordingly, the human retina appears to be upside down. However, here we argue that things are perhaps not quite so black and white. Ranging from evolutionary history via neuronal economy to behaviour, there are in fact plenty of reasons why an inverted retinal design might be considered advantageous. [Emphasis added.]

For the remainder of the article, with 28 references, they consider advantages of the inverted retina, which humans share with all other vertebrates, and the everted retina shared by most invertebrates, with ample evolutionary storytelling thrown in. Here are specific criticisms of the inverted retina with their responses.

Blind spot. The inverted retina needs a place for bundling the nerves from the photoreceptors into a hole so they can join in the optic nerve to the brain. This so-called blind spot is “not all that bad,” they point out; it only occupies 1% of the visual field in humans and is filled in with data from the other eye. “Moreover, body movements can ensure suitable sampling of visual scenes despite this nuisance,” they say. “After all, when is the last time you have felt inconvenienced by your own blind spots?”

Optically compromised space. Surely the tangles of neural cells in front of the photoreceptors reduce optical quality, don’t they? Not really, say Baden and Nilsson, for several reasons:

Looking out through a layer of neural tissue may seem to be a serious drawback for vertebrate vision. Yet, vertebrates include birds of prey with the most acute vision of any animal, and even in general, vertebrate visual acuity is typically limited by the physics of light, and not by retinal imperfections. Likewise, photoreceptor cell bodies, which in vertebrate eyes are also in the way of the retinal image, do not seem to strongly limit visual acuity. Instead, in several lineages, which include species of fish, reptiles and birds, these cell bodies contain oil droplets that improve colour vision and/or clumps of mitochondria that not only provide energy but also help focus the light onto the photoreceptor outer segments.

They don’t specifically mention the Mueller cells that act as waveguides to the photoreceptors, but surely those are among the “surprising” ways that the “design challenges have been met” in the inverted retina.

Benefits of Inverted Retinas

The inverted retina also provides distinct advantages:

Preprocessing. One thing the everted retina cannot do as well is process the information before it gets to the brain. Baden and Nilsson spend some time discussing why this is so very beneficial.

To fully understand the merits of the inverted design, we need to consider how visual information is best processed. The highly correlated structure of natural light means that the vast majority of light patterns sampled by eyes are redundant. Using retinal processing, vertebrate eyes manage to discard much of this redundancy, which greatly reduces the amount of information that needs to be transmitted to the brain. This saves colossal amounts of energy and keeps the thickness of the optic nerve in check, which in turn aids eye movements.

For example, they say, a blue sky consists of mostly redundant information. The vertebrate eye “truly excels” at concentrating on new and unexpected information, like the shadow of a bird flying against the sky. Neurons that monitor portions of the visual field that have not changed can stay inactive, saving energy. What’s more, the vertebrate eye contains layers of specialized cells that preprocess the information sent to the brain, giving the eye “predictive coding” as reported elsewhere. They touch on that fact here:

The extensive local circuitry within the eye — enabled by two thick and densely interconnected synaptic layers, achieves an amazingly efficient, parallel representation of the visual scene. By the time the signal gets to the ganglion cells that form the optic nerve, spikes are mostly driven by the presence of the unexpected.

Useful space. The inverted retina uses to good advantage the vitreous space for placing the “extensive local circuitry” for the preprocessing cells. Squid eyes, with the photoreceptors smack against the optic lobe, lack that benefit. Here, Baden and Nilsson turn the tables on the “bad design” critics so eloquently one must read it in their own words:

Returning to our central narrative, the intraocular space of vertebrate eyes is an ideal location for such early processing, hinting that the vertebrate retina is in fact cleverly oriented the right way! … Taking larval zebrafish as the best studied example, the somata and axons of their ganglion cells are squished up right against the lens, while on the other end the outer segment of photoreceptors sits neatly inserted into the pigment epithelium that lines the eyeball. Clearly, in these smallest of perfectly functional vertebrate eyes, the inverted retina has allowed efficient use of every cubic micron of intraocular real estate. In contrast, now it is suddenly the cephalopod retina that appears to have an awkward orientation. … For tiny eyes, the everted design wastes extremely valuable space inside the eye whereas the inverted retinal design is a blessing. With this reasoning, cephalopods have an unfortunate retinal orientation and, contrary to the general notion, it is the vertebrate retina that is the right way up.

“Close to Perfect”

As a capstone to their argument, they conclude that “In terms of performance, vertebrate eyes come close to perfect.” This does not mean the poor octopus is the loser in this surprising upset. If scientists knew more about cephalopod eye performance within the animals’ own circumstances, a similar conclusion would probably be justified. “Both the inverted and the everted principles of retinal design have their advantages and their challenges, or shall we say ‘opportunities’.”

Surely with all this engineering and design talk, the authors are ready to jump the Darwin ship and join the ID community, right? One must never underestimate the stubbornness and storytelling ability of evolutionists. Next time we will look at how they explain all this engineering perfection in Darwinian terms.

Loaded much?

A Thought Experiment

I’d like to propose a thought experiment for you. I will ask three questions and offer three possible answers for each. I would like you, the reader, to consider the answers as objectively as you can, and pick the answer that is correct. It shouldn’t be too hard, right?

Ah, but if your worldview is at stake, what then?

1. A doctor, having found out that I did intelligent design research, asked me: if my research indicated that something could evolve that I had thought could not, would I publish it?

A. Of course.

B. No.

C. Keep trying until I get the answer I want.

2. A doctor, having found out that I did evolutionary biology research, asked me: if my research indicated that something could not evolve that I had thought could, would I publish it?

A. Of course.

B. No.

C. Keep trying until I get the answer I want.

Question 1 happened to me. I answered, “Of course.” This is because I am a scientist first, and an intelligent design advocate second. To even ask the question impugned my integrity as a scientist. That was the point of the question, I suppose.

The same doctor would not ask question 2 of an evolutionary biologist. He was already sure evolution was true, so the question would be meaningless for him.

Final question.

3. Why should anyone think it is OK to question the integrity of a scientist (or anyone) because of her views on intelligent design or evolution?

A. Because they are JUST WRONG. And they ought to know it.

B. Because they are just protecting their turf.

C. It’s not OK.

Well?

Thursday 14 April 2022

Another revolution eating her children?

<iframe width="853" height="480" src="https://www.youtube.com/embed/AHR15JxckZg" title="YouTube video player" frameborder="0" allow="accelerometer; autoplay; clipboard-write; encrypted-media; gyroscope; picture-in-picture" allowfullscreen></iframe>

Shell companies: An exposition.

<iframe width="853" height="480" src="https://www.youtube.com/embed/gZSaaVPgqAs" title="YouTube video player" frameborder="0" allow="accelerometer; autoplay; clipboard-write; encrypted-media; gyroscope; picture-in-picture" allowfullscreen></iframe>

London: Financial laundromat of the world?

<iframe width="853" height="480" src="https://www.youtube.com/embed/ySyx-7CZJfg" title="YouTube video player" frameborder="0" allow="accelerometer; autoplay; clipboard-write; encrypted-media; gyroscope; picture-in-picture" allowfullscreen></iframe>

James R. Lewis in his own words.

<iframe width="853" height="480" src="https://www.youtube.com/embed/K-uBeGbvVrQ" title="YouTube video player" frameborder="0" allow="accelerometer; autoplay; clipboard-write; encrypted-media; gyroscope; picture-in-picture" allowfullscreen></iframe>

Ibn Battuta: A brief history.

<iframe width="853" height="480" src="https://www.youtube.com/embed/WlQdLLOmW3o" title="YouTube video player" frameborder="0" allow="accelerometer; autoplay; clipboard-write; encrypted-media; gyroscope; picture-in-picture" allowfullscreen></iframe>

Pre-Darwinian complexity and the design debate.

Existential Implications of the Miller-Urey Experiment

Editor’s note: We have been delighted to present a series by Neil Thomas, Reader Emeritus at the University of Durham, “Why Words Matter: Sense and Nonsense in Science.” This is the seventh and final article in the series. Find the full series so far here. Professor Thomas’s recent book is Taking Leave of Darwin: A Longtime Agnostic Discovers the Case for Design (Discovery Institute Press).

As I have suggested already in this series, there undoubtedly was much at stake in the Miller-Urey experiment — considerably more than was realized at the time by those who listened uncritically to Carl Sagan and others with an interest in deceptively boosting the supposed importance of the experiment. Its implicit promise for many observers as well as eager readers of the American and world press would have been that it would extend Darwin’s timeline back to the pre-organic formation of the first living cell, and so establish the fundamental point of departure for the mechanism of natural selection to go to work on. It would also of course have delivered a stunning victory for the materialist position. In the event, though, it succeeded only in dealing a disabling body-blow to materialist notions by giving game set and match to the theistic position. This point has not, to my knowledge, been publicly acknowledged.

Hot Springs, Hydrothermal Vents, Etc.

Most devastatingly for Darwinists, the complete failure of this and more recent experiments to find the origins of primitive life forms in hot springs, hydrothermal vents in the ocean floor, etc., has removed the indispensable foundation for the operation of natural selection. By that I mean that any postulated selective mechanism must obviously have something to select. No raw material means no evolution, no nothing. Without an “abiogenetic moment” Darwin’s entire theory of evolution via natural selection falls flat.

As matters stand, the bare emergence of living cells remains an unsolved mystery, let alone the claimed corollary of that mysterious and unexplained cellular “complexification” (yet another word without any demonstrable referent, it may be noted) said to follow from it and to have occasioned the fabled development from microbes to (wo)man. The most significant finding of Miller and Urey appears to have been a categorical disproof of Darwinian ideas and a presumptive indication of a supra-natural etiology for the cellular system — an inference to theistic creation/evolution which was of course the very obverse of the result they were seeking.

Nothing Can Come from Nothing

To sum up: there is perhaps limited value in trying to rank in order of gravity the many objections to Darwinism which have been thrown up over the last 160 years. If pressed to do a sort of countdown to number one, however, I would have to say that this particular objection should rank very high up. This is because to attempt to discuss the subject of how the process of selection by nature began to operate whilst not even broaching the question of how nature itself arose in the first place must count as a major evasion.

It is in fact such a glaring logical elision that it can only be viewed (in plain English) as a cop-out. Nothing can come of nothing, goes the old tag, and without knowledge of or at the very least a credible theory concerning the provenance of organic material, the theory of “natural selection” lacks any coherent foundation even for the starting point of its putative operations. Evolutionary biology finds itself in the unenviably anomalous position of being based on an illusory premise without any discernible foundation. Yet the urge to find proof for “natural selection” endures. That, I guess, is a powerful reminder that words, even meaningless words, have the power to create their own virtual realities in our minds, with no relation to any definable referent in the world we inhabit.

Wednesday 13 April 2022

Censorship is for the greater good?

<iframe width="853" height="480" src="https://www.youtube.com/embed/DMGL_zqvnTo" title="YouTube video player" frameborder="0" allow="accelerometer; autoplay; clipboard-write; encrypted-media; gyroscope; picture-in-picture" allowfullscreen></iframe>

My problem with Postmillennialism.

Daniel2:35KJV"Then was the iron, the clay, the brass, the silver, and the gold, broken to pieces together, and became like the chaff of the summer threshingfloors; and the wind carried them away, that no place was found for them: and the stone that smote the image became a great mountain, and filled the whole earth."

Daniel 2:44 KJV "And in the days of these kings shall the God of heaven set up a kingdom, which shall never be destroyed: and the kingdom shall not be left to other people, but it shall break in pieces and consume all these kingdoms, and it shall stand for ever."

Revelation 20:11 KJV "And I saw a great white throne, and him that sat on it, from whose face the earth and the heaven fled away; and there was found no place for them."

Note, please, that the rule of JEHOVAH'S kingdom over this earth takes place after the destruction of the present human kingdoms; there is to be no millennium of parallel rule between JEHOVAH'S kingdom and Satan's empire.

Revelation 20:6 KJV "Blessed and holy is he that hath part in the first resurrection: on such the second death hath no power, but they shall be priests of God and of Christ, and shall reign with him a thousand years."

1 Corinthians 15:23 KJV "But every man in his own order: Christ the firstfruits; afterward they that are Christ's at his coming (Parousia)."

John 6:39 KJV "And this is the Father's will which hath sent me, that of all which he hath given me I should lose nothing, but should raise it up again at the last day."

Note that the millennium follows the first resurrection, which follows the beginning of Christ's parousia. Paul makes it clear that the present age is not the time for Christians to seek any dominion over the earth or any part thereof: 1 Corinthians 4:8 KJV "Now ye are full, now ye are rich, ye have reigned as kings without us: and I would to God ye did reign, that we also might reign with you."

Hebrews 2:8 KJV "Thou hast put all things in subjection under his feet. For in that he put all in subjection under him, he left nothing that is not put under him. But now we see NOT YET all things put under him."

Evidently Paul did not think that Christ's millennial reign had begun.

Why the Origin Of Life remains darwinism's unicorn. III

Frankenstein and His Offspring

Editor’s note: We are delighted to present a series by Neil Thomas, Reader Emeritus at the University of Durham, “Why Words Matter: Sense and Nonsense in Science.” This is the sixth article in the series. Find the full series so far here. Professor Thomas’s recent book is Taking Leave of Darwin: A Longtime Agnostic Discovers the Case for Design (Discovery Institute Press).

I have been writing about the neologism “abiogenesis” (see earlier posts here and here). Like “panspermia,”1 it is but one example of an old concept (it was first mooted by Svante Arrhenius in 1903) which periodically undergoes a curious form of (intellectual) cryogenic freezing only to reappear after a decent lapse of time and memory to be presented afresh under a revamped name2 as an idea claimed to be worth a second look.

In essence it seems to draw its strength from pseudo-scientific folk-beliefs that life could somehow be made to emerge from non-life, a conception most notably exploited (and obliquely criticized) in Mary Shelley’s Frankenstein (1818). In another example of the literary/media intelligentsia being ahead of the curve, the refusal of the discredited spontaneous generation to give up the ghost gave that anarchic auteur Mel Brooks ample raw material to ridicule the atavistic misconception in his inspired 1974 comic movie, Young Frankenstein.

Two American Scientists

For those who did not catch this laugh-out-loud film: the engaging anti-hero, played by the inimitable Gene Wilder, scion of the notorious Baron Frankenstein, at first does everything possible to put distance between himself and his notorious ancestor, whom he memorably dismisses before a class of his students as a “kook,” and thereafter insists on his surname being pronounced Frankensteen. However, the temptation to attempt the impossible “one last time” proves too much either for “Dr. Frankensteen” (whom the film shows reverting to type when he latterly de-Americanizes his surname to Frankenstein) or, it appears, for two American scientists, Stanley Miller and Harold Urey, to resist.

Most of us probably remember Brooks’s oeuvre as being of a somewhat variable standard, but in amongst the pure goofery of Young Frankenstein, as Brooks himself put it in an interview, the film contained an unmistakably satirical thrust because “the doctor (Wilder) is undertaking the quest to defeat death — to challenge God.”3 That is a not inappropriate epitaph for the Miller-Urey experiment as well as its later avatars, it might be thought.

Next, the final post in this series, “Existential Implications of the Miller-Urey Experiment.”

Notes

- The (unfounded) notion that life was “seeded” on Earth after having been wafted here from distant planets — a much-derided notion but one which still re-emerges from time to time as a somewhat desperate kite-flying exercise on the part of some scientists who, now as ever, remain at a loss to account for the emergence of animal and human life on earth.

- Aristotle had termed it spontaneous generation.

- Cited by Patrick McGilligan in his biography, Funny Man: Mel Brooks (New York: Harper Collins, 2019), p. 355.

Why the Origin Of Life remains darwinism's unicorn II

Imagining “Abiogenesis”: Crick, Watson, and Franklin

Editor’s note: We are delighted to present a series by Neil Thomas, Reader Emeritus at the University of Durham, “Why Words Matter: Sense and Nonsense in Science.” This is the fifth article in the series. Find the full series so far here. Professor Thomas’s recent book is Taking Leave of Darwin: A Longtime Agnostic Discovers the Case for Design (Discovery Institute Press).

I wrote here yesterday about the Miller-Urey experiment at the University of Chicago in 1953 as an effort to investigate the possibility of spontaneous generation. To be fair to both distinguished collaborators, Stanley Miller and Harold Urey, this was no desperate shot in the dark to bolster materialist thinking. They had clearly done all the requisite preparation for their task. Miller and Urey (a later recipient of the Nobel Prize) theorized that if the conditions prevailing on primeval Earth were reproduced in laboratory conditions, such conditions might prove conducive to a chemical synthesis of living material.

To Produce Life

To abbreviate a long, more complex story, they caused an electric spark to pass through a mixture of methane, hydrogen, ammonia, and water to simulate the kind of energy which might have come from thunderstorms on the ancient Earth. The resulting liquid turned out to contain amino acids which, though not living molecules themselves, are the building blocks of proteins, essential to the construction of life.1 However, the complete chemical pathway hoped for by many was not to materialize. In fact, the unlikelihood of such a materialization was underscored in the very same year that the Miller-Urey experiment took place when Francis Crick, James Watson, and Rosalind Franklin succeeded in identifying the famous double helix of DNA. Their discovery revealed, amongst other things, that even if amino acids could somehow be induced to form proteins, this would still not be enough to produce life.

Despite over-optimistic press hype in the 1950s, which came to include inter alia fulsome eulogizing by Carl Sagan, it has in more recent decades been all but conceded that life is unlikely to form at random from the so-called “prebiotic” substrate on which scientists had previously pinned so much hope. To be sure, there are some biologists, such as Richard Dawkins, who still pin their faith on ideas which have resulted only in blankly negative experimental results.2 Some notions, it appears, will never completely die for some, despite having been put to the scientific sword on numerous occasions — as long, of course, as they hold out the promise of a strictly materialist explanation of reality.

Next, “Frankenstein and His Offspring.”

Notes

- Inside human cells, coded messages in the DNA are translated by RNA into working molecules of protein, which is responsible for life’s functions.

- “Organic molecules, some of them of the same general type as are normally only found in living things, have spontaneously assembled themselves in these flasks. Neither DNA nor RNA has appeared, but the building blocks of these large molecules, called purines and pyrimidines, have. So have the building blocks of proteins, amino acids. The missing link for this class of theories is still the origin of replication. The building blocks haven’t come together to form a self-replicating chain like RNA. Maybe one day they will.” (Richard Dawkins, The Blind Watchmaker, London: Penguin, 1986, p. 43)

Why the Origin of Life remains darwinism's unicorn.

Considering “Abiogenesis,” an Imaginary Term in Science

Editor’s note: We are delighted to present a series by Neil Thomas, Reader Emeritus at the University of Durham, “Why Words Matter: Sense and Nonsense in Science.” This is the fourth article in the series. Find the full series so far here. Professor Thomas’s recent book is Taking Leave of Darwin: A Longtime Agnostic Discovers the Case for Design (Discovery Institute Press).

Words are cheap and, in science as in other contexts, they can be used to cover up and camouflage a multitude of areas of ignorance. In this series so far, I have dealt summarily with several such terms, since I anticipated that they are already familiar to readers, and as I did not wish to belabor my fundamental point.

“Just Words”

I would, however, like to discuss in somewhat more detail a term which is well enough known but whose manifold implications may not even now, it appears to me, have been appreciated to their full extent. This is the historically recent neologism “abiogenesis” — meaning spontaneous generation of life from a combination of unknown chemical substances held to provide a quasi-magical bridge from chemistry to biology. This term, when subjected to strict logical parsing, I will argue, undermines the very notion of what is commonly understood by Darwinian evolution since it represents a purely notional, imaginary term which might also (in my judgment) be usefully relegated to the category of “just words.”

The greatest problem for the acceptance of Darwinism as a self-standing and logically coherent theory is the unsolved mystery of the absolute origin of life on earth, a subject which Charles Darwin tried to bat away as, if not a total irrelevance, then as something beyond his competence to pronounce on. Even today Darwinian supporters will downplay the subject of the origins of life as a matter extraneous to the subject of natural selection. It is not. It is absolutely foundational to the integrity of natural selection as a conceptually satisfactory theory, and evolutionary science cannot logically even approach the starting blocks of its conjectures without cracking this unsolved problem, as the late 19th-century German scientist Ludwig Buechner pointed out.1

Chicago 1953: Miller and Urey

Darwin famously put forward in a letter the speculation of life having been spontaneously generated in a small warm pool, but he did not follow up on the hunch experimentally. This challenge was left to Stanley Miller and Harold Urey, two much later intellectual legatees in the middle of the 20th century who, in defiance of previous expert opinion, staged an unusual experiment. The remote hinterland of this experiment was as follows. In the 17th century, medical pioneer Sir William Harvey and Italian scientist Francesco Redi both proved the untenability of spontaneous generation: only life can produce life, a finding later to be upheld by French scientist Louis Pasteur in the latter half of the 19th century; but the two Americans proceeded on regardless.

Far-Reaching Theological Implications

There is no getting away from the fact that the three-fold confirmation of the impossibility of spontaneous generation by respected scientists working independently of each other in different centuries brought with it far-reaching theological implications. For if natural processes could not account for life’s origins, then the only alternative would be a superior force standing outside and above nature but with the power to initiate nature’s processes. The three distinguished scientists were in effect and by implication ruling out any theory for the origin of life bar that of supranatural creation. So it was hardly surprising that there emerged in later times a reaction against their “triple lock” on the issue.

In what was shaping up to become the largely post-Christian 20th century in Europe, the untenability of the abiogenesis postulate was resisted by many in the scientific world on purely ideological grounds. The accelerating secularizing trends of the early 20th century meant that the outdated and disproven notion of spontaneous generation was nevertheless kept alive on a form of intellectual life-support despite the abundant evidence pointing to its unviability.

For presently both the Russian biologist Alexander Oparin and the British scientist John Haldane stepped forward to revive the idea in the 1920s. The formal experiment to investigate the possibility of spontaneous generation had then to wait a few decades more before the bespoke procedure to test its viability in laboratory conditions was announced by the distinguished team of Miller and Urey of the University of Chicago in 1953. Clearly the unspoken hope behind this now (in)famous experiment was the possibility that Pasteur, Harvey, and Redi might have been wrong to impose their “triple lock” and that mid 20th-century advances might discover a solution where predecessors had failed. If ever there was an attempt to impose a social/ideological construction of reality on science in line with materialist thinking, this was it.

Next, “Imagining ‘Abiogenesis’: Crick, Watson, and Franklin.”

Notes

- For the reception of Darwin in Germany, see Alfred Kelly, The Descent of Darwin: The Popularization of Darwin in Germany, 1860-1914 (Chapel Hill: North Carolina UP, 1981).

And still yet even more on why I.D is already mainstream.

SETI Activists Still Don’t Get the Irony

SETI is on a roll again. The Search for Extra-Terrestrial Intelligence oscillates in popularity although it has rumbled on since the 1970s like a carrier tone, waiting for a spike to stand out above the cosmic noise. Instrument searches are largely automated these days. Once in a while somebody raises the subject of SETI above the hum of scientific news. The principal organization behind SETI has been busily humming in the background but now has a message to broadcast.

The SETI Institute announced that the Very Large Array (VLA) in New Mexico has been outfitted to stream data for “technosignature research.” Technosignatures are the new buzzword in SETI. Unlike the old attempts to detect meaningful messages like How to Serve Man, the search for technosignatures involves looking for “signs of technology not caused by natural phenomena.” Hold that thought for later.

COSMIC SETI (the Commensal Open-Source Multimode Interferometer Cluster Search for Extraterrestrial Intelligence) took a big step towards using the National Science Foundation’s Karl G. Jansky Very Large Array (VLA) for 24/7 SETI observations. Fiber optic amplifiers and splitters are now installed for all 27 VLA antennas, giving COSMIC access to a complete and independent copy of the data streams from the entire VLA. In addition, the COSMIC system has used these links to successfully acquire VLA data, and the primary focus now is on developing the high-performance GPU (Graphical Processing Unit) code for analyzing data for the possible presence of technosignatures. [Emphasis added.]

A “Golden Fleece Award”

Use of government funding for SETI has been frowned on ever since Senator William Proxmire gave it his infamous “Golden Fleece Award” in 1979, and got it cancelled altogether three years later. The SETI Institute learned from that shaming incident to conceal its aims in more recondite jargon, and “technosignatures” fills the bill nicely. So how did they succeed in getting help from the National Radio Astronomy Observatory (NRAO) to use a government facility? Basically, it’s just a data sharing arrangement. COSMIC will not interfere with the VLA’s ongoing work but will tap into the data stream. With access to 27 dishes, each 25 meters across and linked by interferometry, this constitutes a data bonanza for the SETI Institute — the next best thing to Project Cyclops that riled Proxmire with its proposed 1,000 dishes costing half a billion dollars back at a time when a billion dollars was real money.

Another method that the SETI Institute is employing is looking for laser pulses over wide patches of the night sky. Last year, the institute announced progress in installing a second LaserSETI site at the Haleakala Observatory in Hawaii with the cooperation of the University of Hawaii. The first one is at Robert Ferguson Observatory in Sonoma, California. No tax dollars are being spent on these initiatives.

Initial funding for LaserSETI was raised through a crowdfunding campaign in 2017, with additional financing provided through private donations. The plan calls for ten more instruments deployed in Puerto Rico, the Canary Islands, and Chile. When this phase is complete, the system will be able to monitor the nighttime sky in roughly half of the western hemisphere.

Unprecedented Searches

This brings up another reason for growing SETI news: technological advancements are making possible unprecedented searches. “Each LaserSETI device consists of two identical cameras rotated 90 degrees to one another along the viewing axis,” they say. “They work by using a transmission grating to split light sources up into spectra, then read the camera out more than a thousand times per second.” This optical form of search differs from the traditional radio wave searches of the past, and is once again a hunt for technosignatures.

Writing for Universe Today, Evan Gough connected the search for biosignatures, such as microbes being sought by Mars Rovers, with technosignatures being sought by the SETI Institute.

The search for biosignatures is gaining momentum. If we can find atmospheric indications of life at another planet or moon — things like methane and nitrous oxide and a host of other chemical compounds — then we can wonder if living things produced them. But the search for technosignatures raises the level of the game. Only a technological civilization can produce technosignatures.

NASA has long promoted the search for biosignatures. Its Astrobiology programs that began with the Mars Meteorite in 1997 have continued despite later conclusions that the structures in the rock were abiotic. In the intervening years, astrobiology projects have been deemed taxpayer-worthy, but SETI projects have not. That may be changing. Marina Koren wrote for The Atlantic in 2018 that the search for technosignatures has gained a little support in Congress, boosted by the discovery of thousands of exoplanets from the Kepler Mission. SETI Institute’s senior astronomer Seth Shostak has become friends with one congressman.

“Kepler showed us that planets are as common as cheap motels, so that was a step along the road to finding other life because at least there’s the real estate,” says Shostak. “That doesn’t mean there’s any life there, but at least there are planets.”

Decadal Survey on Astronomy

Gough mentions the Decadal Survey on Astronomy, named Astro2020, that was released in 2021 from the National Academies of Sciences (NAS). It contained initiatives that could overlap astrobiology with SETI by extending searches for biosignatures to searches for technosignatures. Worded that way, they don’t seem that far apart. One white paper specifically linked the two:

The Astro2020 report outlines numerous recommendations that could significantly advance technosignature science. Technosignatures refer to any observable manifestations of extraterrestrial technology, and the search for technosignatures is part of the continuum of the astrobiological search for biosignatures (National Academies of Sciences 2019a,b). The search for technosignatures is directly relevant to the “Worlds and Suns in Context” theme and “Pathways to Habitable Worlds” program in the Astro2020 report. The relevance of technosignatures was explicitly mentioned in “E1 Report of the Panel on Exoplanets, Astrobiology, and the Solar System,” which stated that “life’s global impacts on a planet’s atmosphere, surface, and temporal behavior may therefore manifest as potentially detectable exoplanet biosignatures, or technosignatures” and that potential technosignatures, much like biosignatures, must be carefully analyzed to mitigate false positives. The connection of technosignatures to this high-level theme and program can be emphasized, as the report makes clear the purpose is to address the question “Are we alone?” This question is also presented in the Explore Science 2020-2024 plan as a driver of NASA’s mission.

The most likely technosignature that could be seen at stellar distances, unfortunately for the SETI enthusiasts, would have to be on the scale of a Dyson Sphere: a theoretical shell imagined by Freeman Dyson, built by a desperate civilization to collect all the energy from a dying star and so preserve itself from a heat death (see the graphic in Gough’s article). The point is that such a “massive engineering structure” would require the abilities of intelligent beings with foresight and planning much grander than ours.

Hunting for technosignatures is less satisfying than “Contact” — it lacks the relationship factor. It’s like eavesdropping instead of conversing. We can only wonder what kind of beings would make such things. Maybe the signatures are like elaborate bird nests, interesting but instinctive. Worse, maybe the signatures have a natural explanation we don’t yet understand.

A unique feature of intelligent life, SETI enthusiasts often assume, is the desire to communicate. We’ll explore that angle of SETI next time.

Friday 8 April 2022

Addressing Darwinist just so stories on eye evolution.

More Implausible Stories about Eye Evolution

Recently an email correspondent asked me about a clip from Neil deGrasse Tyson’s reboot of Cosmos where he claims that eyes could have evolved via unguided mutations. Even though the series is now eight years old, it’s still promoting implausible stories about eye evolution. Clearly, despite having been addressed by proponents of intelligent design many times over, this issue is not going away. Let’s revisit the question, as Tyson and others have handled it.

In the clip, Tyson claims that the eye is easily evolvable by natural selection and it all started when some “microscopic copying error” created a light-sensitive protein for a lucky bacterium. But there’s a problem: Creating a light-sensitive protein wouldn’t help the bacterium see anything. Why? Because seeing requires circuitry or some kind of a visual processing pathway to interpret the signal and trigger the appropriate response. That’s the problem with evolving vision — you can’t just have the photon collectors. You need the photon collectors, the visual processing system, and the response-triggering system. At the very least three systems are required for vision to give you a selective advantage. It would be prohibitively unlikely for such a set of complex coordinated systems to evolve by stepwise mutations and natural selection.

A “Masterpiece” of Complexity

Tyson calls the human eye a “masterpiece” of complexity, and claims it “poses no challenge to evolution by natural selection.” But do we really know this is true?

Darwinian evolution tends to work fine when one small change or mutation provides a selective advantage, or as Darwin put it, when an organ can evolve via “numerous, successive, slight modifications.” If a structure cannot evolve via “numerous, successive, slight modifications,” Darwin said, his theory “would absolutely break down.” Writing in The New Republic some years ago, evolutionist Jerry Coyne essentially concurred on that: “It is indeed true that natural selection cannot build any feature in which intermediate steps do not confer a net benefit on the organism.” So are there structures that would require multiple steps to provide an advantage, where intermediate steps might not confer a net benefit on the organism? If you listen to Tyson’s argument carefully, I think he let slip that there are.

Tyson says that “a microscopic copying error” gave a protein the ability to be sensitive to light. He doesn’t explain how that happened. Indeed, biologist Sean B. Carroll cautions us to “not be fooled” by the “simple construction and appearance” of supposedly simple light-sensitive eyes, since they “are built with and use many of the ingredients used in fancier eyes.” Tyson doesn’t worry about explaining how any of those complex ingredients arose at the biochemical level. What’s more interesting is what Tyson says next: “Another mutation caused it [a bacterium with the light-sensitive protein] to flee intense light.”

An Interesting Question

It’s nice to have a light-sensitive protein, but unless the sensitivity to light is linked to some behavioral response, then how would the sensitivity provide any advantage? Only once a behavioral response also evolved — say, to turn towards or away from the light — can the light-sensitive protein provide an advantage. So if a light-sensitive protein evolved, why did it persist until the behavioral response evolved as well? There’s no good answer to that question, because vision is fundamentally a multi-component, and thus a multi-mutation, feature. Multiple components — both visual apparatus and the encoded behavioral response — are necessary for vision to provide an advantage. It’s likely that these components would require many mutations. Thus, we have a trait where an intermediate stage — say, a light-sensitive protein all by itself — would not confer a net advantage on the organism. This is where Darwinian evolution tends to get stuck.

Tyson seemingly assumes those subsystems were in place, and claims that a multicellular animal might then evolve a more complex eye in a stepwise fashion. He says the first step is that a “dimple” arises which provides a “tremendous advantage,” and that dimple then “deepens” to improve visual acuity. A pupil-type structure then evolves to sharpen the focus, but this results in less light being let in. Next, a lens evolves to provide “both brightness and sharp focus.” This is the standard account of eye evolution that I and others have critiqued before. Francis Collins and Karl Giberson, for example, have made a similar set of arguments.

Such accounts invoke the abrupt appearance of key features of advanced eyes including the lens, cornea, and iris. The presence of each of these features — fully formed and intact — would undoubtedly increase visual acuity. But where did the parts suddenly come from in the first place? As Scott Gilbert of Swarthmore College put it, such evolutionary accounts are “good at modelling the survival of the fittest, but not the arrival of the fittest.”

Hyper-Simplistic Accounts

As a further example of these hyper-simplistic accounts of eye evolution, Francisco Ayala in his book Darwin’s Gift to Science and Religion asserts, “Further steps — the deposition of pigment around the spot, configuration of cells into a cuplike shape, thickening of the epidermis leading to the development of a lens, development of muscles to move the eyes and nerves to transmit optical signals to the brain — gradually led to the highly developed eyes of vertebrates and cephalopods (octopuses and squids) and to the compound eyes of insects.” (p. 146)

Ayala’s explanation is vague and shows no appreciation for the biochemical complexity of these visual organs. Thus, regarding the configuration of cells into a cuplike shape, biologist Michael Behe asks (in responding to Richard Dawkins on the same point):

And where did the “little cup” come from? A ball of cells–from which the cup must be made–will tend to be rounded unless held in the correct shape by molecular supports. In fact, there are dozens of complex proteins involved in maintaining cell shape, and dozens more that control extracellular structure; in their absence, cells take on the shape of so many soap bubbles. Do these structures represent single-step mutations? Dawkins did not tell us how the apparently simple “cup” shape came to be.

Michael J. Behe, Darwin’s Black Box: The Biochemical Challenge to Evolution, p. 15 (Free Press, 1996)

An Integrated System

Likewise, mathematician and philosopher David Berlinski has assessed the alleged “intermediates” for the evolution of the eye. He observes that the transmission of data signals from the eye to a central nervous system for data processing, which can then output some behavioral response, comprises an integrated system that is not amenable to stepwise evolution:

Light strikes the eye in the form of photons, but the optic nerve conveys electrical impulses to the brain. Acting as a sophisticated transducer, the eye must mediate between two different physical signals. The retinal cells that figure in Dawkins’ account are connected to horizontal cells; these shuttle information laterally between photoreceptors in order to smooth the visual signal. Amacrine cells act to filter the signal. Bipolar cells convey visual information further to ganglion cells, which in turn conduct information to the optic nerve. The system gives every indication of being tightly integrated, its parts mutually dependent.

The very problem that Darwin’s theory was designed to evade now reappears. Like vibrations passing through a spider’s web, changes to any part of the eye, if they are to improve vision, must bring about changes throughout the optical system. Without a correlative increase in the size and complexity of the optic nerve, an increase in the number of photoreceptive membranes can have no effect. A change in the optic nerve must in turn induce corresponding neurological changes in the brain. If these changes come about simultaneously, it makes no sense to talk of a gradual ascent of Mount Improbable. If they do not come about simultaneously, it is not clear why they should come about at all.

The same problem reappears at the level of biochemistry. Dawkins has framed his discussion in terms of gross anatomy. Each anatomical change that he describes requires a number of coordinate biochemical steps. “[T]he anatomical steps and structures that Darwin thought were so simple,” the biochemist Mike Behe remarks in a provocative new book (Darwin’s Black Box), “actually involve staggeringly complicated biochemical processes.” A number of separate biochemical events are required simply to begin the process of curving a layer of proteins to form a lens. What initiates the sequence? How is it coordinated? And how controlled? On these absolutely fundamental matters, Dawkins has nothing whatsoever to say.

David Berlinski, “Keeping an Eye on Evolution: Richard Dawkins, a Relentless Darwinian Spear Carrier, Trips Over Mount Improbable,” Globe & Mail (November 2, 1996)

More or Less One Single Feature

In sum, standard accounts of eye evolution fail to explain the evolution of key eye features such as:

- The biochemical evolution of the fundamental ability to sense light

- The origin of the first “light-sensitive spot”

- The origin of neurological pathways to transmit the optical signal to a brain

- The origin of a behavioral response to allow the sensing of light to give some behavioral advantage to the organism

- The origin of the lens, cornea, and iris in vertebrates

- The origin of the compound eye in arthropods

At most, accounts of the evolution of the eye provide a stepwise explanation of “fine gradations” for the origin of more or less one single feature: the increased concavity of eye shape. That does not explain the origin of the eye. But from Neil Tyson and the others, you’d never know that.

Against the stigma of the skilled trades.

The Stigma of Choosing Trade School Over College

When college is held up as the one true path to success, parents—especially highly educated ones—might worry when their children opt for vocational school instead.

Toren Reesman knew from a young age that he and his brothers were expected to attend college and obtain a high-level degree. As a radiologist—a profession that requires 12 years of schooling—his father made clear what he wanted for his boys: “Keep your grades up, get into a good college, get a good degree,” as Reesman recalls it. Of the four Reesman children, one brother has followed this path so far, going to school for dentistry. Reesman attempted to meet this expectation, as well. He enrolled in college after graduating from high school. With his good grades, he got into West Virginia University—but he began his freshman year with dread. He had spent his summers in high school working for his pastor at a custom-cabinetry company. He looked forward each year to honing his woodworking skills, and took joy in creating beautiful things. School did not excite him in the same way. After his first year of college, he decided not to return.

He says pursuing custom woodworking as his lifelong trade was disappointing to his father, but Reesman stood firm in his decision, and became a cabinetmaker. He says his father is now proud and supportive, but breaking with family expectations in order to pursue his passion was a difficult choice for Reesman—one that many young people are facing in the changing job market.

Traditional-college enrollment in the United States has risen this century, from 13.2 million students in 2000 to 16.9 million in 2016, an increase of 28 percent, according to the National Center for Education Statistics. Meanwhile, trade-school enrollment has also risen, from 9.6 million students in 1999 to 16 million in 2014. This resurgence came after a decline in vocational education in the 1980s and ’90s. That dip created a shortage of skilled workers and tradespeople.

Many jobs now require specialized training in technology that bachelor’s programs are usually too broad to address, leading to more “last mile”–type vocational-education programs after the completion of a degree. Programs such as Galvanize aim to teach specific software and coding skills; Always Hired offers a “tech-sales bootcamp” to graduates. The manufacturing, infrastructure, and transportation fields are all expected to grow in the coming years—and many of those jobs likely won’t require a four-year degree.

This shift in the job and education markets can leave parents feeling unsure about the career path their children choose to pursue. Lack of knowledge and misconceptions about the trades can lead parents to steer their kids away from these programs, when vocational training might be a surer path to a stable job.

Raised in a family of truck drivers, farmers, and office workers, Erin Funk was the first in her family to attend college, obtaining a master’s in education and going on to teach second grade for two decades. Her husband, Caleb, is a first-generation college graduate in his family, as well. He first went to trade school, graduating in 1997, and later decided to strengthen his résumé following the Great Recession. He began his bachelor’s degree in 2009, finishing in 2016. The Funks now live in Toledo, Ohio, and have a 16-year-old son, a senior in high school, who is already enrolled in vocational school for the 2019–20 school year. The idea that their son might not attend a traditional college worried Erin and Caleb at first. “Vocational schools where we grew up seemed to be reserved for people who weren’t making it in ‘real’ school, so we weren’t completely sure how we felt about our son attending one,” Erin says. Both Erin and Caleb worked hard to be the first in their families to obtain college degrees, and wanted the same opportunity for their three children. After touring the video-production-design program at Penta Career Center, though, they could see the draw for their son. Despite their initial misgivings, after learning more about the program and seeing how excited their son was about it, they’ve thrown their support behind his decision.

But not everyone in the Funks’ lives understands this decision. Erin says she ran into a friend recently, and “as we were catching up, I mentioned that my eldest had decided to go to the vocational-technical school in our city. Her first reaction was, ‘Oh, is he having problems at school?’ I am finding as I talk about this that there is an attitude out there that the only reason you would go to a vo-tech is if there’s some kind of problem at a traditional school.” The Funks’ son has a 3.95 GPA. He was simply more interested in the program at Penta Career Center. “He just doesn’t care what anyone thinks,” his mom says.

The Funks are not alone in their initial gut reaction to the idea of vocational and technical education. Negative attitudes and misconceptions persist even in the face of the positive statistical outlook for the job market for these middle-skill careers. “It is considered a second choice, second-class. We really need to change how people see vocational and technical education,” Patricia Hsieh, the president of a community college in the San Diego area, said in a speech at the 2017 conference for the American Association of Community Colleges. European nations prioritize vocational training for many students, with half of secondary students (the equivalent of U.S. high-school students) participating in vocational programs. In the United States, since the passage of the 1944 GI Bill, college has been pushed over vocational education. This college-for-all narrative has been emphasized for decades as the pathway to success and stability; parents might worry about the future of their children who choose a different path.

Dennis Deslippe and Alison Kibler are both college professors at Franklin and Marshall College in Lancaster, Pennsylvania, so it was a mental shift for them when, after high school, their son John chose to attend the masonry program at Thaddeus Stevens College of Technology, a two-year accredited technical school. John was always interested in working with his hands, Deslippe and Kibler say—building, creating, and repairing, all things that his academic parents are not good at, by their own confession.

Deslippe explains, “One gap between us as professor parents and John’s experience is that we do not really understand how Thaddeus Stevens works in the same way that we understand a liberal-arts college or university. We don’t have much advice to give. Initially, we needed some clarity about what masonry exactly was. Does it include pouring concrete, for example?” (Since their son is studying brick masonry, his training will likely not include concrete work.) Deslippe’s grandfather was a painter, and Kibler’s grandfather was a woodworker, but three of their four parents were college grads. “It’s been a long-standing idea that the next generation goes to college and moves out of ‘working with your hands,’” Kibler muses. “Perhaps we are in an era where that formula of rising out of trades through education doesn’t make sense?”

“College doesn’t make sense” is the message that many trade schools and apprenticeship programs are using to entice new students. What specifically doesn’t make sense, they claim, is the amount of debt many young Americans take on to chase those coveted bachelor’s degrees. There is $1.5 trillion in student debt outstanding as of 2018, according to the Federal Reserve. Four in 10 adults under the age of 30 have student-loan debt, according to the Pew Research Center. Master’s and doctorate degrees often lead to even more debt. Earning potential does not always offset the cost of these loans, and only two-thirds of those with degrees think that the debt was worth it for the education they received. Vocational and technical education tends to cost significantly less than a traditional four-year degree.

The stability that comes with a marketable skill and little debt is appealing to Marsha Landis, who lives with her cabinetmaker husband and two children outside of Jackson Hole, Wyoming. Landis has a four-year degree from a liberal-arts college, and when she met her husband while living in Washington, D.C., she found his profession to be a refreshing change from the typical men she met in the Capitol Hill dating scene. “He could work with his hands, create,” she says. “He wasn’t pretentious and wrapped up in the idea of degrees. And he came to the marriage with no debt and a marketable skill, something that has benefited our family in huge ways.” She says that she has seen debt sink many of their friends, and that she would support their children if they wanted to pursue a trade like their father.

In the United States, college has been painted as the pathway to success for generations, and it can be, for many. Many people who graduate from college make more money than those who do not. But the rigidity of this narrative could lead parents and students alike to be shortsighted as they plan for their future careers. Yes, many college graduates make more money—but less than half of students finish the degrees they start. This number drops as low as 10 percent for students in poverty. The ever sought-after college-acceptance letter isn’t a guarantee of a stable future if students aren’t given the support they need to complete a degree. If students are exposed to the possibility of vocational training early on, that might help remove some of the stigma, and help students and parents alike see a variety of paths to a successful future.