New Paper Investigates Engineering Design Constraints on the Bacterial Flagellum

- Casey Luskin

A new peer-reviewed paper in the journal BIO-Complexity, “An Engineering Perspective on the Bacterial Flagellum: Part 1 — Constructive View,” comes out of the Engineering Research Group and Conference on Engineering in Living Systems that Steve Laufmann recently wrote about. The author, Waldean Schulz, holds a PhD in computer science from Colorado State University and is a signer of the Scientific Dissent from Darwinism list. What could a computer scientist say about the bacterial flagellum? Well, Schulz explains that his study “examines the bacterial flagellum from an engineering viewpoint,” concentrating on “the structure, proteins, control, and assembly of a typical flagellum, which is the organelle imparting motility to common bacteria.”

This technique of examining biology through the eyes of engineering is not necessarily new; systems biologists have been doing it for years. However, because engineering seeks to determine how best to design technology, the field of intelligent design, which approaches living systems as designed, promises to yield new engineering-based insights into biology. Schulz’s paper is a prime example of such a contribution. It offers what is arguably the most rigorous logical demonstration to date of the irreducible complexity of the flagellum.

A Goal-Directed Approach

Intelligent design is fundamentally a goal-directed approach to studying natural systems, in which the various parts and components of biological organisms are coordinated to work together in the top-down manner of engineering. Accordingly, Schulz’s paper takes a “constructive approach,” which requires a “top-down specification.” Here’s how this approach works:

It starts with specifying the purpose of a bacterial motility organelle, the environment of a bacterium, its existing resources, its existing constitution, and its physical limits, all within the relevant aspects of physics and molecular chemistry. From that, the constructive approach derives the logically necessary functional requirements, the constraints, the assembly needs, and the hierarchical relationships within the functionality. The functionality must include a control subsystem, which needs to properly direct the operation of a propulsion subsystem. Those functional requirements and constraints then suggest a few — and only a few — viable implementation schemata for a bacterial propulsion system. The entailed details of one configuration schema are then set forth.

This approach is very similar to Paul Nelson’s ideas about “design triangulation”: you identify some function that is needed, and, if the system was intelligently designed, you can back-engineer the other components and parts that will be needed to fulfill that function. After all, “engineers regularly specify and design systems top-down, but they construct those systems bottom-up.” Thus an engineering analysis seems the best way to understand the flagellum.

Schulz introduces engineering methodologies, which flow naturally from an ID paradigm, to study the flagellum. He writes:

A common engineering methodology, called the Waterfall Model, first produces a formal Functional Requirements Specification document. Then a design is proposed in a System Design Specification, which must comport with the Requirements Spec. Typically this methodology is often accompanied by a Testing Specification, which measures how well the subsequently constructed system satisfies the requirements. This methodology was and is successfully applied at Intel, Image Guided Technologies, and Stryker. A similar specification method can be used by a patent agent or attorney in helping inventors clarify in detail what they have invented for a patent application.

When applying this method, one examines the “overall purpose for the proposed system, the usage environment, necessary functionality, available materials, tools needed for construction, and various parameters and constraints (dimensions, form, cost, materials, energy needs, timing, costs, and other conditions).” After doing this, “a design is proposed that logically comports with those requirements.”

What Are the Requirements for Bacterial Motility?

Schulz then applies this method to the flagellum, asking: What are the requirements for bacterial motility? “In doing this,” he notes, “the constructive approach becomes — in effect — the engineering documentation that must be written as if a clever bioengineer were tasked to devise a motility system for a bacterium lacking a motive organelle.” He answers various questions outlined by the “Waterfall Model”:

- What is the overall needed function? Answer: “First, the system should enable a bacterium to sense and move toward nutrients needed for metabolic energy, self-repair, and reproduction. Second, the system should enable its bacterium to sense and escape hostile locales, such as toxic or noxious material.”

- What is the environment of the flagellum? Answer: “The environment of a typical bacterium generally may include both nutrients and deleterious substances. Further, the bacterium typically is suspended within a liquid or semi-fluid medium.”

Thinking Like an Engineer

Schulz thus determines that the system requires “a propulsion subsystem to accomplish motion.” It also requires “some form of primitive redirection subsystem, working in concert with or integrated with the propulsion subsystem” to help the bacterium find nutrients. There must also be a “collateral control subsystem” that can “sense favorable or unfavorable substances.” He outlines the control logic of this system, including signals that indicate whether the bacterium should proceed “full speed ahead” or “flee and redirect.”

The response time for these signals and the motility speeds they induce must also suit the needed functions, and the speeds must not be excessive. For example, “A substantially faster speed would be wasteful of energy,” and “The energy cost to operate the propulsion subsystem must be less than the energy obtained by navigating to and consuming nutrients.” There are also assembly constraints, including that “The material resources and energy requirements to build a propulsion system must be low enough to justify its construction — that is, to justify the benefit of motion to find new nutrients for metabolism.”

An Irreducibly Complex System

He notes that all of these resulting requirements present us with an irreducibly complex system:

[T]he goal is to specify only a minimal set of requirements, assuring that all the requirements of the subsystem are essential. That would imply that the specified sensory-propulsion-redirection system is effectively irreducible. That is, if some part is missing or defective, then, at best, there would be noticeably diminished motility, if any.

Schulz then proposes a design for the bacterial flagellum that fulfills these requirements for motility. The requirements that must be met include the following:

- “the propulsion subsystem needs a source of power to operate”

- “there must be a power-to-motion transducer”

- “there must be sensors to detect whether the propulsion system should move the bacterium forward … there must be some external member physically interacting with the environmental medium containing the bacterium.”
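The sensing and decision requirements Schulz identifies, together with the “full speed ahead” and “flee and redirect” signals he describes, can be illustrated with a toy controller. This is a minimal sketch under stated assumptions, not Schulz’s specification: the `Signal` names, the `decide` and `motility_pays_off` functions, the normalized 0 to 1 sensor readings, the 0.5 threshold, and the rule that a toxin reading overrides a nutrient reading are all hypothetical choices made for illustration. The second function simply restates the quoted energy constraint that operating cost must be less than the energy obtained.

```python
from enum import Enum


class Signal(Enum):
    """Hypothetical control messages named after the signals Schulz describes."""
    FULL_SPEED_AHEAD = "full speed ahead"
    FLEE_AND_REDIRECT = "flee and redirect"


def decide(nutrient_level: float, toxin_level: float, threshold: float = 0.5) -> Signal:
    """Map two normalized sensor readings (0 to 1) to a control signal.

    Assumptions (illustrative only): a toxin reading above the threshold
    overrides everything, and the absence of nutrients also triggers a
    change of direction.
    """
    if toxin_level > threshold:
        return Signal.FLEE_AND_REDIRECT   # escape hostile locales first
    if nutrient_level > threshold:
        return Signal.FULL_SPEED_AHEAD    # keep moving toward food
    return Signal.FLEE_AND_REDIRECT       # nothing useful here: try a new heading


def motility_pays_off(operating_cost: float, energy_gained: float) -> bool:
    """Energy constraint: running the propulsion subsystem must cost less
    than the energy obtained by reaching and consuming nutrients."""
    return operating_cost < energy_gained
```

For example, `decide(0.9, 0.1)` yields the “full speed ahead” signal, while `decide(0.9, 0.8)` yields “flee and redirect” because the toxin reading takes priority in this sketch.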

There must also be various assembly requirements. Construction materials could include a variety of potential biomolecules, including sugars, RNA, DNA, nucleotide bases, or proteins. Which of these should be used? For Schulz, the answer is clear: “an obvious generic requirement of using available fabrication tools, templates, and control effectively rules out the use of other materials, such as sugars or non-protein polymers.”

There are several potential basic designs for propulsion. One is a jet-like nozzle (which would require a bladder and many more parts). Another is rhythmic flexing (which would require a long, flexible body and much more). Still another is a leg-like appendage (which would require appendages), a snake-like caterpillar crawl (again requiring a long, flexible body), or a helical propeller. Schulz explores what would be necessary for a helical propeller, the actual design of bacterial flagella. He discusses various needed parts, including “an armature or mounting structure, a motor rotor, a drive shaft of appropriate length, a helical propeller, and possibly adaptors to bind those components together.” Further, he notes, “there need to be bearings and seals between the rotary components and static components.” Here’s a fuller description of the needs:

The static subassembly requires the following components: the semi-rigid cell membrane(s) for rigid mounting, a motor stator, multiple sealed bearings where the rotary subassembly penetrates cell membranes, and an energy conduction pathway.

The stator together with the motor rotor produces torque. The stator must be rigidly attached to some or all of the bacterium’s inner and outer membranes and the peptidoglycan layer. The rigid attachment transfers necessary counter-torque to the cell body as well as providing stability for the rotary subassembly. For each membrane or layer the drive shaft penetrates, there must be a bearing. Each bearing must (a) stabilize the drive shaft, (b) provide a low-friction contact with the drive shaft, and (c) provide a seal to prevent movement of molecules past where the shaft penetrates its host membrane or layer.
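The bearing requirement in the passage above is concrete enough to express as a checklist: one bearing per penetrated membrane or layer, each satisfying conditions (a), (b), and (c). The sketch below is a hypothetical illustration, not anything from the paper; the `Bearing` record, the `missing_bearing_requirements` function, and the layer names used in the example are assumptions chosen to mirror the quoted text.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Bearing:
    layer: str          # membrane or layer the drive shaft passes through
    stabilizes: bool    # (a) stabilizes the drive shaft
    low_friction: bool  # (b) provides low-friction contact with the shaft
    seals: bool         # (c) keeps molecules from moving past the shaft


def missing_bearing_requirements(penetrated_layers: list[str],
                                 bearings: list[Bearing]) -> list[str]:
    """Return one message per unmet requirement (empty list if all are met)."""
    by_layer = {b.layer: b for b in bearings}
    problems = []
    for layer in penetrated_layers:
        bearing = by_layer.get(layer)
        if bearing is None:
            problems.append(f"{layer}: no bearing")
            continue
        if not bearing.stabilizes:
            problems.append(f"{layer}: shaft not stabilized")
        if not bearing.low_friction:
            problems.append(f"{layer}: high-friction contact")
        if not bearing.seals:
            problems.append(f"{layer}: no seal")
    return problems
```

For example, with the layers `["inner membrane", "peptidoglycan layer", "outer membrane"]`, a candidate design whose outer-membrane bearing lacked a seal would be reported as `outer membrane: no seal`, and a design with no outer-membrane bearing at all as `outer membrane: no bearing`.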

A Dependency Network

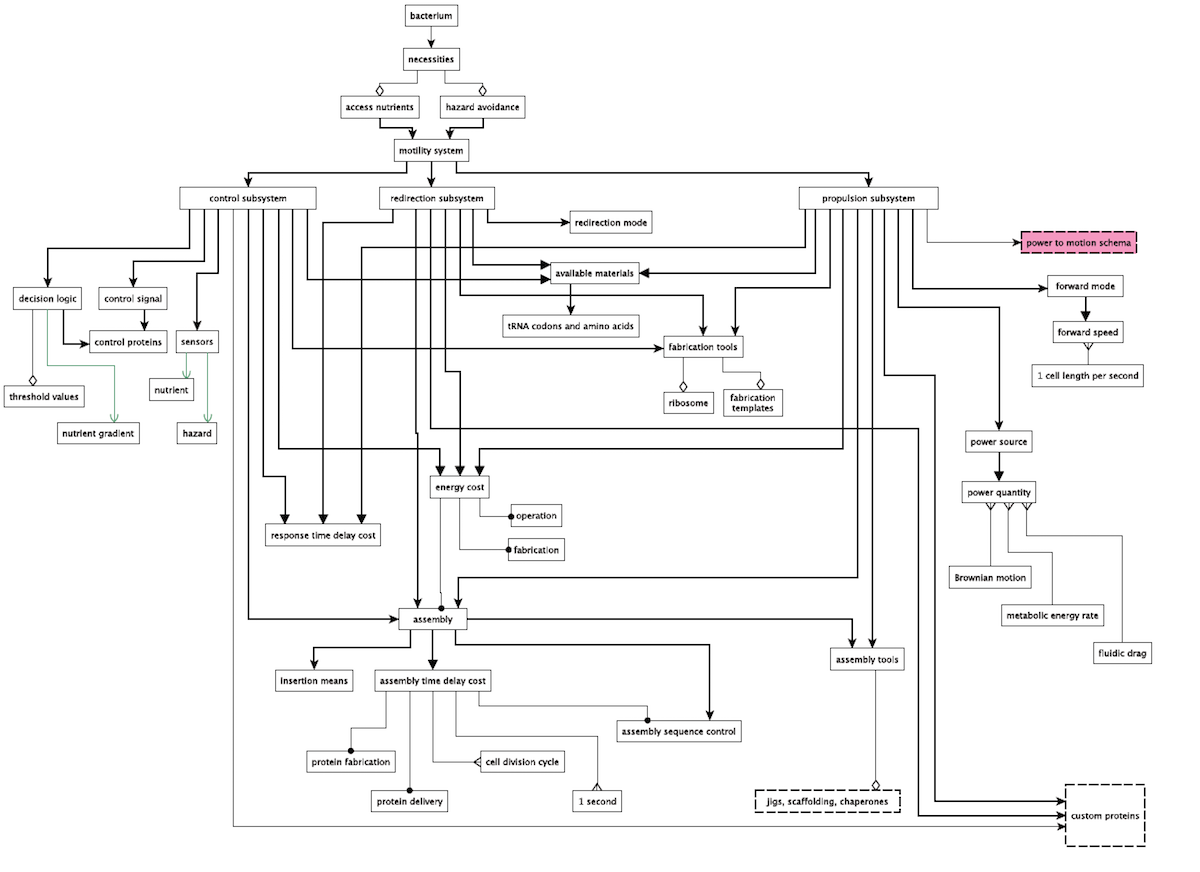

Schulz finally develops a dependency network for these requirement showing their “interdependency relationships” which addresses all of the above constraints, including the “purpose, environment, required functions, constraints, and the logically implied static, structural requirements.” It’s quite a detailed diagram — here it is — it’s a bit large so click here for a full-resolution version:
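The logic of a dependency network, and the sense of “irreducible” in the requirements passage quoted earlier (if some part is missing, motility is lost), can be sketched as a small directed graph. The component names below are taken from the article, but the edges in `DEPENDS_ON` are a hypothetical simplification, not a transcription of Schulz’s diagram, and the functions `still_works` and `essential_parts` are illustrative. The model assumes every edge is a hard requirement with no alternative pathways.

```python
# Hypothetical, simplified dependency map: each item lists what it directly needs.
DEPENDS_ON = {
    "motility": ["propulsion", "redirection", "control"],
    "propulsion": ["propeller", "drive shaft", "motor"],
    "motor": ["rotor", "stator", "energy pathway"],
    "drive shaft": ["bearings"],
    "control": ["sensors", "decision logic"],
    "redirection": [],
    "propeller": [],
    "rotor": [],
    "stator": [],
    "energy pathway": [],
    "bearings": [],
    "sensors": [],
    "decision logic": [],
}


def still_works(goal: str, removed: set, graph: dict = DEPENDS_ON) -> bool:
    """True if `goal` is satisfied when the `removed` components are absent.

    Every dependency is treated as required (an AND relationship), and the
    graph is assumed to be acyclic so the recursion terminates.
    """
    if goal in removed:
        return False
    return all(still_works(dep, removed, graph) for dep in graph[goal])


def essential_parts(goal: str, graph: dict = DEPENDS_ON) -> list:
    """Leaf components whose individual removal disables `goal`."""
    leaves = [node for node, deps in graph.items() if not deps]
    return sorted(p for p in leaves if not still_works(goal, {p}, graph))
```

Because every edge here is treated as a hard AND dependency, each leaf component comes out as essential; whether that assumption holds for each relationship is precisely what a detailed dependency network is meant to document.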

In an impressive table, Schulz lists all of the different components and properties of the flagellum and the rationale for their inclusion. He notes that although there are a couple of different ways to build the system, “In either case, the specified bacterial motility system would be irreducibly complex” and that the “intricate coherence” of all of the parts, systems, and design requirements of the flagellum “is essentially irreducible.” He concludes:

Current evolutionary biology proposes that the flagellum could have been “engineered” naturalistically by cumulative mutations, by horizontal gene transfer, by gene duplication, by co-option of existing organelles, by self-organization, or by some combination thereof. See the summary and references by Finn Pond. Yet to date, no scenario in substantive detail exists for how such an intricate propulsion system could have evolved naturalistically piece by piece. Can any partial implementation of a motility system be even slightly advantageous to a bacterium? Examples of a partial system might lack sensors, lack decision logic, lack control messages, lack a rotor or stator, lack sealed bearings, lack a rod, lack a propeller, or lack redirection means. Would such partial systems be preserved long enough for additional cooperating components to evolve?

That is the key question, which Schulz aims to explore in future papers. Based upon the “intricate coherence” and “irreducible complexity” of the numerous parts and properties of the flagellum, the answers to these questions would seem to be no.